Introduction to Computers

By Paribesh Sapkota

Introduction:

Computers are more than simply devices in the modern world. They are an inseparable part of our lives, our partners in work, recreation, and education. However, let’s start with the fundamentals before delving into their amazing uses and complex inner workings.

What is a computer?

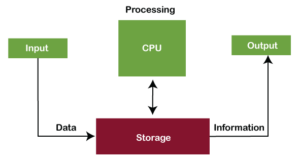

A computer is an electronic device that can process data, execute programs, and store data. It receives information, processes it and performs calculations as directed, and outputs the results. Think of it as a powerful device that can compute data, produce eye-catching graphics, and even learn from its mistakes!

What is an analog computer?

An analog computer works with continuously varying signals: both its inputs and its outputs are physical quantities, such as voltages, that change smoothly over time. It is the earliest type of computer, and some are still in use today. Analog computers were among the most widely used computers of the 1950s and 1960s. Compared with digital computers, they are slower, less dependable, and less efficient, and they can store only a small amount of data. Because operating them requires knowledge of science and signal processing, analog computers are now used only in certain specialized applications.

What is a digital computer?

A digital computer works with discrete signals, that is, signals that take only a limited set of distinct values. Digital electronic components such as transistors, digital ICs, and logic gates make up a digital computer. These computers carry out computations and other tasks using the binary number system. A standard digital computer takes input in the form of binary signals (0s and 1s), processes them in accordance with the user's instructions, and then presents the results, for example on a digital display, in a form the user can read. The first electronic digital computers were built in the 1940s to carry out numerical computations, and the technology has advanced enormously since then.
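
To make the idea of working in 0s and 1s a little more concrete, here is a small Python sketch. It is only an illustration: the function names `to_binary` and `add_binary` and the 8-bit width are assumptions chosen for this example, not part of any real machine. It converts a number to binary by repeated division by 2, then adds two binary strings one bit at a time using only logic operations, roughly the way logic gates would.

```python
def to_binary(n, width=8):
    """Convert a non-negative integer to a fixed-width string of 0s and 1s."""
    if n < 0 or n >= 2 ** width:
        raise ValueError("number does not fit in the given width")
    bits = []
    for _ in range(width):
        n, bit = divmod(n, 2)      # the remainder is the next bit, lowest first
        bits.append(str(bit))
    return "".join(reversed(bits))


def add_binary(a, b):
    """Add two equal-length bit strings bit by bit, using only logic operations."""
    carry = 0
    out = []
    for x, y in zip(reversed(a), reversed(b)):
        x, y = int(x), int(y)
        out.append(str(x ^ y ^ carry))            # sum bit (XOR)
        carry = (x & y) | (carry & (x ^ y))       # carry bit (AND / OR)
    return "".join(reversed(out)), carry


five = to_binary(5)        # "00000101"
twelve = to_binary(12)     # "00001100"
total, overflow = add_binary(five, twelve)
print(five, "+", twelve, "=", total)   # 00010001, which is 17 in decimal
```

The second value returned by `add_binary` is the carry out of the highest bit, which tells you whether the result overflowed the chosen width.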

What are the key characteristics of computers?

- Speed:

- Processing Power: The speed of modern computers is measured in terms of the number of calculations they can perform per second. This processing power enables them to execute complex algorithms, simulations, and computations swiftly.

- Multitasking: Computers can handle multiple tasks simultaneously, efficiently switching between processes without a significant loss in performance.

- Accuracy:

- Binary Representation: Digital computers use a binary system (0s and 1s) for data representation. This binary representation ensures precise and accurate processing, reducing errors in calculations and data manipulation.

- Error Correction: Advanced algorithms and error-checking mechanisms contribute to the overall accuracy of digital systems.

- Versatility:

- Software Compatibility: Computers are versatile due to their ability to run a wide range of software applications. From word processing and graphic design to scientific research and gaming, computers adapt to diverse user needs.

- Programming Flexibility: The programmability of computers allows users to develop and run custom software, expanding their functionality to suit specific requirements.

- Storage:

- Data Retention: Computers use various storage devices, such as hard drives and solid-state drives, to retain vast amounts of data persistently. This includes the operating system, software applications, and user-generated content.

- Data Accessibility: Quick access to stored information enables efficient retrieval and manipulation of data, contributing to the overall utility of computers.

- Connectivity:

- Networking: Computers can connect to each other through local area networks (LANs) or the internet. This connectivity enables data sharing, communication, and collaboration on a global scale.

- Cloud Computing: The ability to access and store data in the cloud enhances collaboration and ensures that information is available from anywhere with an internet connection.

History of Computers:

The earliest counting tools were used by early humans, who counted with sticks, stones, and bones. As human knowledge and technology advanced, more sophisticated computing devices were created. Some of the best-known computing devices, from the earliest to the most modern, are described below:

Abacus

The history of computers is often said to begin with the abacus, which is regarded as the earliest computing device. Its invention is commonly credited to the Chinese, around 4,000 years ago. It was a wooden rack holding metal rods on which beads were threaded.

Parts of the abacus

- Beads: Positioned on rods or wires, beads are the physical elements used for counting and representing numerical values. On a typical Chinese abacus, each bead in the lower section counts as one and each bead in the upper section counts as five; higher place values such as tens and hundreds are represented by separate rods.

- Rods or Wires: These are the vertical columns or wires on the abacus frame where the beads are threaded. Each rod corresponds to a specific place value, creating a structure that allows for the representation of numbers in a place-value system.
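
As a rough illustration of the place-value idea, the sketch below models an abacus in Python. It assumes the common convention in which an upper bead counts as five and a lower bead counts as one on each rod; the function names `set_abacus` and `read_abacus` are made up for this example.

```python
def set_abacus(number, rods=7):
    """Return, for each rod (highest place value first), the beads moved
    as a pair (upper_beads, lower_beads)."""
    digits = str(number)
    if number < 0 or len(digits) > rods:
        raise ValueError("number does not fit on this abacus")
    digits = digits.zfill(rods)
    # A digit d is shown with d // 5 upper beads (worth 5) and d % 5 lower beads (worth 1).
    return [divmod(int(d), 5) for d in digits]


def read_abacus(rod_states):
    """Read the number back: each rod is one decimal place."""
    value = 0
    for upper, lower in rod_states:
        value = value * 10 + 5 * upper + lower
    return value


state = set_abacus(1907, rods=4)
print(state)               # [(0, 1), (1, 4), (0, 0), (1, 2)]
print(read_abacus(state))  # 1907
```

Each pair in the list is one rod, so moving one position to the left multiplies a bead's value by ten.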

This tool’s picture is displayed below;

Napier’s Bones:

Napier’s Bones is a historically significant manually-operated calculating device invented by John Napier (1550-1617) of Merchiston. This calculating tool utilizes nine different ivory strips or bones, each marked with numbers, to facilitate multiplication and division.

Key features:

- Division and Multiplication: Napier’s Bones were created to make division and multiplication simpler. Users aligned the numbered strips to complete these computations quickly.

- Decimal Point: Napier’s Bones is also often described as the first calculating device to make use of the decimal point, which made it possible to represent and manipulate decimal numbers correctly.

- Operational Mechanism: To carry out a multiplication, the bones were arranged according to the digits of the number being multiplied, and the result was then read off the aligned strips. The bones were used in a similar way for division, where they helped determine the quotient.

- Historical Significance: Napier’s Bones played a pivotal role in advancing mathematical computation during a time when manual methods were predominant. The device showcased Napier’s ingenuity in simplifying complex calculations.
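
The operational mechanism can be sketched in a few lines of Python. This is a simplified model rather than a faithful reproduction of the physical device: each "bone" is assumed to be a strip listing the multiples of one digit, split into tens and units, and the answer is read by adding neighbouring cells along the diagonals. The names `bone`, `multiply_by_digit`, and `multiply` are invented for this example.

```python
def bone(digit):
    """One strip: the multiples digit*1 .. digit*9, each split into (tens, units)."""
    return [divmod(digit * row, 10) for row in range(1, 10)]


def multiply_by_digit(number, k):
    """Multiply a non-negative integer by a single digit k (1-9) by reading row k
    across the bones and adding along the diagonals, right to left."""
    if not 1 <= k <= 9:
        raise ValueError("k must be a single digit from 1 to 9")
    cells = [bone(int(d))[k - 1] for d in str(number)]
    digits = []
    carry = 0
    incoming_tens = 0          # tens part of the cell to the right
    for tens, units in reversed(cells):
        total = units + incoming_tens + carry
        carry, digit = divmod(total, 10)
        digits.append(digit)
        incoming_tens = tens
    digits.append(incoming_tens + carry)
    return int("".join(str(d) for d in reversed(digits)))


def multiply(number, multiplier):
    """Multi-digit multiplication: one row reading per multiplier digit, shifted and added."""
    total = 0
    for shift, ch in enumerate(reversed(str(multiplier))):
        k = int(ch)
        if k:
            total += multiply_by_digit(number, k) * 10 ** shift
    return total


print(multiply_by_digit(425, 6))   # 2550
print(multiply(425, 36))           # 15300
```

The person using the bones still had to do the diagonal additions by hand; the strips only removed the need to remember the multiplication table.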

Pascaline:

The Pascaline, also known as the Arithmetic Machine or Adding Machine, is an early mechanical calculator. It was invented between 1642 and 1644 by the French mathematician-philosopher Blaise Pascal. The Pascaline played a crucial role in the history of computing, showcasing the potential for automating mathematical processes. While it had limitations, its invention marked a significant step towards the development of mechanical devices that would later evolve into more advanced calculators and, eventually, modern computers.

Key features:

- Invention: Blaise Pascal devised the Pascaline to help his father, a tax collector, who needed a more effective method of performing computations.

- Purpose: The primary purpose of the Pascaline was to automate the process of addition and subtraction, making mathematical computations more accurate and efficient.

- Mechanical Workings: The Pascaline worked through a set of gears and cogs. Users dialed in numbers, and the machine added or subtracted them automatically.

- Limited Functionality: Each Pascaline could handle numbers of up to eight digits, and its sole functions were addition and subtraction.

- Production: Pascal built a number of Pascaline prototypes and only a few commercially available machines, mostly for bookkeeping applications.
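
The way a row of geared wheels can add numbers and pass carries along may be easier to see in code. The class below is a toy model written for this article, not a description of Pascal's actual mechanism: the name `Pascaline`, its methods, and the decision to drop a carry past the last wheel are all assumptions made for the sketch, and only addition is modelled.

```python
class Pascaline:
    """Toy model of a Pascaline-style adder (illustrative, not historically exact)."""

    def __init__(self, wheels=8):
        # One decimal wheel per digit, least significant wheel first.
        self.wheels = [0] * wheels

    def _advance(self, position, steps):
        """Turn one wheel by `steps` notches, carrying into the next wheel."""
        if position >= len(self.wheels):
            return  # a carry past the last wheel is simply lost
        total = self.wheels[position] + steps
        self.wheels[position] = total % 10
        if total >= 10:
            self._advance(position + 1, 1)

    def add(self, number):
        """Dial in a non-negative integer, one digit per wheel."""
        for position, digit in enumerate(reversed(str(number))):
            self._advance(position, int(digit))

    def value(self):
        """Read the number currently shown on the wheels."""
        return int("".join(str(d) for d in reversed(self.wheels)))


machine = Pascaline()
machine.add(758)
machine.add(467)
print(machine.value())   # 1225
```

Every time a wheel passes from 9 back to 0, it nudges the wheel to its left forward by one, which is the carry that makes multi-digit addition automatic.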

Stepped Reckoner or Leibniz Wheel:

German mathematician and philosopher Gottfried Wilhelm Leibniz created the Stepped Reckoner, widely referred to as the Leibniz Wheel, in 1673. Leibniz developed a more sophisticated calculator by building upon Blaise Pascal’s previous creation, the Pascaline.

Key features:

- Invention: The Stepped Reckoner was created by Gottfried Wilhelm Leibniz as an upgrade to Blaise Pascal’s previous mechanical calculator, the Pascaline.

- Digital Mechanical Calculator: The Stepped Reckoner was a mechanical calculator made to carry out mathematical operations automatically. It worked on a digital mechanism rather than with analog components.

- Fluted Drums: Where Pascal’s Pascaline relied on simple gears, the Stepped Reckoner used fluted (stepped) drums. Each drum carried a set of teeth of graduated lengths, which made calculations accurate and effective.

- Division and Multiplication: The Stepped Reckoner’s capacity to execute both division and multiplication enhanced its usefulness when compared to its predecessors.

- Decimal Number System: The device was made to function with the decimal number system, which makes handling multi-digit numbers possible.

Difference Engine

A difference engine is an automatic mechanical calculator designed specifically to compute and tabulate polynomial functions. Starting from a small set of initial values derived from the polynomial's coefficients, it produces one value of the polynomial after another. Charles Babbage, often dubbed the “Father of the Modern Computer”, designed the Difference Engine in the early 1820s.
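
One well-known way to tabulate a polynomial using nothing but additions is the method of finite differences, which is the principle behind difference engines. The Python sketch below illustrates the idea under some assumptions of its own: the function names are invented, the coefficients are given constant term first, and the starting values are computed directly, whereas an operator of a real machine would have set them up by hand.

```python
def evaluate(coeffs, x):
    """Evaluate a polynomial given as [c0, c1, c2, ...] (constant term first)."""
    result = 0
    for c in reversed(coeffs):
        result = result * x + c
    return result


def tabulate(coeffs, count):
    """Return p(0), p(1), ..., p(count - 1) using only additions after setup."""
    if not coeffs:
        raise ValueError("at least one coefficient is required")
    degree = len(coeffs) - 1
    # Setup: the first few values and the leading entry of each difference row.
    row = [evaluate(coeffs, x) for x in range(degree + 1)]
    registers = []
    while row:
        registers.append(row[0])
        row = [b - a for a, b in zip(row, row[1:])]
    # Cranking: each step adds every register's neighbour into it.
    table = []
    for _ in range(count):
        table.append(registers[0])
        for i in range(len(registers) - 1):
            registers[i] += registers[i + 1]
    return table


# p(x) = x^2 + x + 41
print(tabulate([41, 1, 1], 5))   # [41, 43, 47, 53, 61]
```

After the setup step, no multiplication is needed at all, which is what made the approach practical for a machine built from adding wheels.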

Benefits of the Difference Engine:

- Accuracy: The Difference Engine was intended to be extremely precise and exact, able to carry out intricate mathematical computations with great accuracy.

- Speed: The machine was an invaluable tool for scientific research, engineering, and other disciplines requiring quick computing since it could do computations far quicker than people could.

- Automation: The Difference Engine was created to be completely automated, which removes the need for manual computation and lowers the likelihood of mistakes.

Drawbacks of the Difference Engine:

- Restricted functionality: The Difference Engine could not easily be modified to handle different jobs, since it was built to carry out one particular kind of computation.

- Complexity: The machine was extremely complicated, which made it very expensive to develop and difficult to construct and maintain.

- Insufficient funds: Babbage had trouble obtaining the project’s necessary funds, which finally caused it to be shelved.

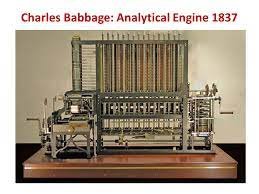

Analytical Engine

The Analytical Engine was a design for a general-purpose, fully program-controlled, automatic mechanical digital computer. Calculations were to be programmed with the help of punched cards. The design also included integrated memory, program flow control, and an arithmetic logic unit (ALU). It is regarded as the first design for a general-purpose mechanical computer, one that could in principle carry out any finite calculation.

Advantages of the Analytical Engine:

- Flexibility: Unlike the Difference Engine, the Analytical Engine was designed to be programmable, which gave it a much wider range of functionality and made it adaptable to a variety of tasks.

- Storage: The design included a “memory” (known as the store) for holding numbers so that they could be recalled for later use, while punched cards supplied the programs and data.

- Potential for automation: The Analytical Engine had the potential to be fully automatic, which would have made it even more efficient and accurate than the Difference Engine.

Disadvantages of the Analytical Engine:

- Complexity: The Analytical Engine was even more complex than the Difference Engine, which made it even more expensive to build and maintain.

- Lack of funding: Babbage struggled to secure funding for the project, which ultimately led to its abandonment.

- Technological limitations: The technology of the time was not advanced enough to fully realize the potential of the Analytical Engine, which made it difficult to build and test.

Tabulating Machine

The Tabulating Machine was invented by Herman Hollerith, an American statistician, and was in use by 1890. It was an electromechanical tabulator based on punched cards. It could tabulate statistics and record or sort data. This machine was used in the 1890 U.S. Census. Hollerith also started the Tabulating Machine Company, which through a later merger became International Business Machines (IBM) in 1924.

Differential Analyzer

The Differential Analyzer was an analog computer introduced in the United States around 1930 and invented by Vannevar Bush. Rather than working with digits, it solved differential equations using continuously varying physical quantities, relying largely on mechanical integrators. It could do 25 calculations in a few minutes.

Mark I

The next major change in the history of computers began in 1937, when Howard Aiken planned to develop a machine that could perform calculations involving large numbers. In 1944, the Mark I computer was built as a partnership between IBM and Harvard. It is often described as one of the first programmable digital computers.

Generations of Computers:

Computers are often classified into generations based on their technological advancements. The five generations of computers include:

1. First Generation (1940s-1950s)

First-generation computers were built with vacuum tubes. ENIAC, for example, used vacuum tubes and relied on machine language and Boolean logic. Computers based on vacuum tubes were very slow at executing programs compared to present-day computers.

What is a Vacuum Tube?

A vacuum tube (also called an electron tube or valve) is a device that controls the flow of electric current in a high vacuum between electrodes to which an electrical potential has been applied.

Characteristics:

- Technology: Vacuum tube technology was the foundation of first-generation computers.

- Size: Computers of this era were large, occupying considerable space.

- Power Consumption: They consumed significant amounts of electrical power.

- Heat Generation: Vacuum tubes produced substantial heat during operation.

Merits:

- Pioneering Era: First-generation computers marked the beginning of electronic computing, representing a groundbreaking shift from manual to automated computation.

- Binary System: These computers utilized the binary system for processing information, laying the foundation for future computing systems.

- Programmability: They were programmable machines, allowing users to perform a variety of calculations.

Demerits:

- Size and Weight: The computers were large and heavy, requiring dedicated rooms for installation.

- Heat Issues: Vacuum tubes generated a substantial amount of heat, necessitating cooling systems and making the environment uncomfortable.

- Reliability: Vacuum tubes were prone to frequent failures, impacting the reliability of these early machines.

- Maintenance: Maintenance was labor-intensive, as faulty vacuum tubes needed to be replaced regularly.

- A few examples are:

- ENIAC

- EDVAC

- UNIVAC

- IBM-701

- IBM-650

2. Second Generation (1950s-1960s): Transistors

The second generation of computers spans the period from the late 1950s to the mid-1960s. During this era, significant advancements were made in computing technology, moving away from vacuum tube-based systems to the utilization of transistors.

Transistors were the key technological innovation of this era and the fundamental component of second-generation computers, replacing the vacuum tubes that were prevalent in first-generation machines.

Key characteristics of second-generation computers include:

- Transistor Technology:

- The major innovation of second-generation computers was the replacement of vacuum tubes with transistors. Transistors were more reliable, smaller in size, generated less heat, and consumed less power.

- Reduction in Size:

- The adoption of transistors allowed for a significant reduction in the size of computers. Second-generation machines were smaller, more compact, and required less physical space compared to their first-generation counterparts.

- Decreased Power Consumption:

- Transistors consumed less power than vacuum tubes, contributing to a reduction in overall power consumption. This made second-generation computers more energy-efficient.

- Magnetic Core Memory:

- Magnetic core memory, a more reliable and faster form of memory, replaced the earlier magnetic drum memory used in first-generation computers. This improvement enhanced overall system performance.

- Assembly Language Programming:

- While second-generation computers still primarily used low-level assembly languages, some systems introduced higher-level programming languages, making programming more accessible and efficient.

- Punched Card Input/Output Continued:

- Punched cards remained a common method for data input and output in second-generation computers, but new technologies, such as magnetic tape and disk drives, started to emerge.

Advantages of Second-Generation Computers:

- Enhanced Reliability: Transistors improved the overall dependability of the system.

- Smaller Size: Transistors made it possible to build more compact, space-efficient computers.

- Reduced Power Consumption: Transistors reduced power consumption, which improved energy efficiency.

- Enhanced Performance and Speed: Processing speed was enhanced by developments in magnetic core memory and transistor technology.

- Magnetic Core Memory: A quicker and more dependable type of memory was introduced.

- Creation of Higher-Level Programming Languages: By introducing higher-level languages, several systems were able to increase programming productivity.

Drawbacks of Computers of the Second Generation:

- Restricted Memory Capacity: The amount of RAM that could be used in computers from the second generation was limited.

- Maintenance Challenges: Although transistors were more dependable than vacuum tubes, these computers still needed regular maintenance.

- Batch Processing: Many systems worked in batches and lacked real-time interaction.

- Input/Output Methods: Punch cards continued to be a popular method, which limited efficiency.

- Complexity of Programming: Higher-level languages were not as common, and programming still needed knowledge of hardware specifications.

- A few examples are:

- Honeywell 400

- IBM 7094

- CDC 1604

- CDC 3600

- UNIVAC 1108

3. Third Generation (1960s-1970s): Integrated circuits

Third-generation computers were an advance over first- and second-generation computers. This generation began around 1965 and ended around 1971. Third-generation computers used integrated circuits instead of individual transistors. An integrated circuit (IC) is a small chip of semiconductor material that contains many miniaturized transistors. With ICs, computers became more reliable, faster, smaller, and less expensive, required less maintenance, and generated less heat. Computation times also fell, from microseconds in the previous generation to nanoseconds. In this generation, punch cards were replaced by the keyboard and mouse. Multiprogramming operating systems, time-sharing, and remote processing were also introduced. High-level programming languages such as BASIC, PASCAL, ALGOL-68, COBOL, and FORTRAN-II were used in third-generation computers.

Third-generation computers’ characteristics include:

- Dependability and Effectiveness: In comparison to earlier generations, third-generation computers were more dependable, quick, and effective.

- Cost-Effectiveness: The mass manufacturing of integrated circuits allowed them to be produced at a lower cost, which increased the economic viability of computers.

- Reduced Size: The adoption of integrated circuits made computers smaller and more space-efficient.

- High-Level Programming Languages: Programming became more accessible with the widespread use of high-level languages like PL/1, ALGOL-68, COBOL, FORTRAN-II, PASCAL, and BASIC.

- Input Devices: More approachable input devices such as keyboards and mice took the place of punch cards.

- Integrated Circuit Technology: Individual transistors were replaced with integrated circuits, which enhanced overall performance and increased component density.

- Greater Storage Capacity: Third-generation computers could store larger volumes of data thanks to their increased storage capacities.

Advantages of Third Generation Computers:

- Space Efficiency: Integrated circuits allowed computers to be smaller, which saved space.

- Reduced Heat and Energy Use: Third-generation computers generated less heat and used less energy when operating, which made them more energy-efficient and less likely to experience hardware failure.

- Modern Input Devices: Punch cards gave way to keyboards and mice, which streamlined input and improved user interaction.

- Improved Accuracy and Storage Capacity: Larger storage capacity and more precise results improved the computing capabilities of these machines.

- Portability and Speed: Third-generation computers offered better processing speed and were easier to move than their predecessors.

Disadvantages of Third Generation Computers:

- Need for Air Conditioning: Despite advancements, air conditioning was still required to maintain optimal operating conditions.

- Sophisticated Technology for IC Manufacturing: Highly sophisticated technology was required for the manufacturing of integrated circuits.

- Maintenance Challenges: Maintaining IC chips could be challenging, potentially leading to difficulties in repairing or replacing components.

4. Fourth Generation (1970s-1980s): Microprocessors

Fourth-generation computers, which were built around microprocessors, were introduced in 1972, following the third generation. These computers made use of Very Large Scale Integration (VLSI) circuit technology, in which thousands of transistors and other components are placed on a single silicon chip to form a microprocessor. This generation also saw supercomputers that could perform many calculations quickly and accurately, the spread of networking, and the use of higher-level and more complex languages such as C, C++, and dBASE.

Characteristics of Computers in the Fourth Generation

The characteristics of fourth-generation computers are as follows:

- In a microprocessor-based system, Very Large Scale Integrated (VLSI) circuits are utilized.

- Microcomputers became the most affordable type of computer in this generation.

- Portable computers became both more popular and more affordable.

- Networking between systems was developed and grew widespread in this era.

- The amount of memory and other storage devices that are readily available has increased significantly.

- The results are now more accurate and consistent.

- The speed, or processing power, has increased dramatically.

- Massive programs started to be employed as storage systems’ capacity increased.

- Significant progress in hardware contributed to the enhancement of output such as screens and papers.

- Several high-level languages were in use in the fourth generation, including C, FORTRAN, COBOL, PASCAL, and BASIC.

Following are some advantages of fourth-generation computers:

- They were designed to be used for a wide range of purposes (general-purpose computers).

- Smaller and more dependable than previous generations of computers.

- There was very little heat generated.

- In many circumstances, the fourth-generation computer does not require a cooling system.

- Portable and less expensive than previous versions.

- Computers from the fourth generation were significantly quicker than those from previous generations.

- Graphical User Interface (GUI) technology was used to make computers more comfortable to use. During this time, PCs became more affordable and widespread.

- Repair time and maintenance costs are reduced.

Following are some disadvantages of fourth-generation computers:

- The fabrication of ICs (integrated circuits) required cutting-edge technology.

- ICs could only be produced with high-quality, reliable manufacturing systems.

- Microprocessors must be manufactured using cutting-edge technology, and in many cases they require a cooler (fan).

5. Fifth Generation (1980s-present): Artificial Intelligence and parallel processing

Fifth-generation computers followed the fourth generation. These contemporary computers are based on artificial intelligence, and their development is still ongoing. The Fifth Generation Computer Systems (FGCS) project was launched in Japan in 1982. Thanks to advances in microelectronics, computers of this generation offer great processing power and parallel processing. Modern high-level languages such as Python, R, C#, and Java are used to program them. They are highly dependable and use Ultra Large Scale Integration (ULSI) technology, combining parallel processing hardware with artificial intelligence software.

These computers are at the cutting edge of modern scientific computations and are being utilized to develop artificial intelligence (AI) software. Artificial intelligence (AI) is a popular discipline of computer science that examines the meaning and methods for programming computers to behave like humans. It is still in its infancy.

Following are some features of fifth-generation computers:

- The ULSI (ultra large scale integration) technology is used in this generation of computers.

- Natural language processing has advanced in this generation.

- These computers can be purchased for a lower price.

- They are more portable and more powerful.

- They are dependable and less expensive.

The benefits of fifth-generation computers are as follows:

- These computers are far faster than earlier models.

- Repairing these machines is easier.

- Compared to computers from previous generations, these computers are noticeably smaller.

- They are portable and low in weight.

- The development of true artificial intelligence is ongoing.

- Parallel processing has advanced.

- Technology related to superconductors has advanced.

Following are some disadvantages of fifth-generation computers:

- They’re usually sophisticated but could be difficult to use.

- They can give businesses additional power to monitor your activities and potentially infect your machine.

Classification of Computers:

Computers can be classified into various categories based on different criteria. Here are common classifications of computers:

1. Based on Size and Performance:

– Supercomputers: Supercomputers are built to handle incredibly complicated computations and simulations that need unmatched processing speed and performance, and they represent the highest level of computing capability. These machines are usually employed in engineering, science, and research applications where enormous computing power is needed.

Features:

- Processing Capacity: Supercomputers are distinguished by their extraordinary processing capacity, performing trillions of computations per second or more (in the range of teraflops to petaflops).

- Parallel Processing: By using parallel processing, they divide large, complicated jobs into smaller subtasks that several processors handle concurrently. This parallelism is what gives them their tremendous processing efficiency.

- Specialized Architecture: Supercomputers frequently have specialized architectures that are tuned for particular kinds of computations, such as scientific modeling, simulations, and floating-point operations.

- Custom Hardware: Supercomputer hardware is frequently designed specifically to meet the demands of the machine. Specialized CPUs, fast interconnects, and cutting-edge cooling systems are examples of this.

- Large-Scale Memory: Large, fast memory systems enable supercomputers to manage enormous datasets and provide quick access to data.
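
The idea behind parallel processing, splitting one big job into subtasks that are handed to several workers, can be shown on a very small scale in Python. The sketch below is only an analogy for what a supercomputer does: the function names are invented for this example, and it uses threads from the standard library's `concurrent.futures` module, which demonstrates how the work is divided and recombined but will not by itself make this particular calculation faster in standard Python.

```python
from concurrent.futures import ThreadPoolExecutor


def partial_sum(bounds):
    """Subtask: sum the squares of the integers in [start, stop)."""
    start, stop = bounds
    return sum(i * i for i in range(start, stop))


def parallel_sum_of_squares(n, workers=4):
    """Sum i*i for i in range(n) by splitting the range into chunks."""
    if n <= 0:
        return 0
    step = -(-n // workers)   # ceiling division: size of each chunk
    chunks = [(lo, min(lo + step, n)) for lo in range(0, n, step)]
    # Hand each chunk to a worker, then combine the partial results.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(partial_sum, chunks))


print(parallel_sum_of_squares(1000) == sum(i * i for i in range(1000)))   # True
```

The pattern is the same at any scale: divide the data, compute the pieces independently, and merge the partial answers at the end.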

Advantages of Supercomputers:

- Supercomputers perform calculations at incredibly high speeds, facilitating rapid data processing and simulations.

- Supercomputers excel in handling intricate simulations for weather forecasting, climate modeling, and fluid dynamics.

- They assist in financial modeling, risk analysis, and algorithmic trading in the financial sector.

Disadvantages of Supercomputers:

- Supercomputers require large amounts of electrical power, contributing to high energy consumption and operational expenses.

- Supercomputers are physically large and require dedicated facilities with robust cooling systems, posing challenges in terms of space.

- Maintenance and repairs of supercomputers can be intricate, requiring specialized technicians and resources.

Examples of Supercomputers:

- IBM Summit and Sierra, Cray XT5 “Jaguar,” Fugaku (developed by RIKEN and Fujitsu) are examples of supercomputers that have held top positions in the TOP500 list of the world’s most powerful supercomputers.

– Mainframe Computers: A mainframe computer is a large, high-performance computing system that serves as the backbone for processing and managing vast amounts of data in critical business applications. These machines are designed for reliability, scalability, and the simultaneous execution of numerous tasks. Mainframes have been a crucial part of enterprise computing for decades.

Key Characteristics:

1. Processing Power: Mainframes are known for their substantial processing power, capable of handling extensive data processing and transactional workloads.

2. Scalability: They can scale up to support increasing workloads without sacrificing performance, making them suitable for large-scale, mission-critical applications.

3. Reliability and Availability: Mainframes are engineered for high reliability and availability, minimizing downtime and ensuring continuous operation.

4. Data Management: Mainframes excel in managing and processing vast amounts of data, making them ideal for applications like database management, transaction processing, and data analytics.

5. Security: Mainframes prioritize security features to protect sensitive data, transactions, and applications. They often include robust access controls and encryption.

Examples of Mainframe Systems:

– IBM zSeries (System z)

– Unisys ClearPath

Advantages of Mainframe Computers Over Supercomputers:

- Mainframes are designed for general-purpose computing and can handle a wide range of applications, making them versatile for various business and organizational needs.

- Mainframes excel in multi-tasking, supporting multiple users and applications concurrently.

- Mainframes prioritize security features, providing robust access controls and encryption to protect sensitive data.

- Mainframes can scale up to accommodate growing workloads efficiently, making them suitable for evolving business needs.

Disadvantages of Mainframe Computers Compared to Supercomputers:

- Mainframes may not match the processing speed of supercomputers for specialized scientific and simulation tasks.

- Mainframes, while efficient, may not achieve the same level of energy efficiency as supercomputers in specialized calculations.

- Mainframes may not offer the same level of performance as supercomputers in highly specialized scientific computing applications.

Minicomputers: A mini computer, also known as a midrange computer, is a type of computer that falls between the size and processing power of mainframe computers and microcomputers (personal computers). Mini computers have been used for a range of applications, offering a balance between performance, cost, and size.

Key Characteristics:

1. Moderate Processing Power: Mini computers provide moderate processing power, generally more powerful than microcomputers but less than mainframes or supercomputers.

2. Compact Size: They are smaller in size compared to mainframes, making them suitable for environments with limited space.

3. Cost-Effective: Mini computers offer a cost-effective solution for businesses and organizations that need more computing power than microcomputers but don’t require the capabilities of a mainframe.

4. Multi-User Support: They support multiple users simultaneously, making them suitable for tasks requiring shared computing resources.

5. Reliability: Mini computers are designed for high reliability and availability, often including features to minimize downtime.

6. Versatility: They are versatile and can be used for a range of applications, including business and scientific computing.

Examples of Mini Computer Systems:

– DEC PDP (Programmed Data Processor) series

– VAX (Virtual Address eXtension) series

Advantages of Mini Computers Over Mainframes:

- Mini computers are generally more cost-effective than mainframes, making them suitable for businesses with budget constraints.

- Mini computers are often easier to maintain compared to mainframes, with simpler hardware configurations and maintenance requirements.

- Mini computers are versatile and can be adapted to a range of applications, making them suitable for businesses with diverse computing needs.

Disadvantages of Mini Computers Compared to Mainframes:

- While suitable for mid-sized organizations, mini computers may face scalability challenges in meeting the demands of large enterprises with extensive computing requirements.

- Mini computers may struggle to efficiently handle extremely large databases or datasets that require the robust processing capabilities of mainframes.

- Mini computers may lack some of the advanced features and capabilities found in mainframes, limiting their performance in certain specialized areas.

Microcomputers:

Microcomputers, also known as personal computers (PCs), are small-sized computing devices that have become ubiquitous in both personal and business settings. They represent a category of computers that includes desktop computers, laptops, and smaller devices designed for individual use.

Key Characteristics:

- Microcomputers are compact, which allows for portability and flexibility in placement.

- processing power.

- They can be used for general-purpose computing tasks, making them suitable for personal, educational, and business use.

- Microcomputers include traditional desktop computers, which consist of a separate monitor, CPU (Central Processing Unit), keyboard, and mouse.

- Microcomputers are relatively affordable, and this affordability has contributed to their widespread adoption.

2. Based on Purpose:

– Personal Computers (PCs):

Personal Computers (PCs) are computing devices designed for individual use and are commonly found in households, educational institutions, and businesses. They are versatile machines that cater to a wide range of computing needs, from general productivity tasks to entertainment and specialized applications. PCs are widely used for productivity tasks, including document creation, spreadsheet analysis, presentations, and other office applications.

Key Characteristics:

- PCs are designed for personal use, allowing individuals to have direct control over the computing resources for tasks such as work, communication, and entertainment.

- PCs are versatile and can handle various applications, including word processing, internet browsing, multimedia playback, gaming, and more.

- PCs have become an integral part of modern households and businesses. They facilitate communication, work, education, and entertainment, contributing to increased productivity and connectivity.

– Workstations:

Workstations are high-performance computing devices designed for specialized tasks that demand substantial processing power, memory, and graphics capabilities. These machines are optimized to handle complex applications in fields such as graphics design, engineering, scientific research, and content creation. Workstations are tailored for specific professional tasks, including graphic design, 3D modeling, engineering simulations, scientific research, and other computationally intensive applications. Workstations come with ample memory (RAM) to handle large datasets and complex computations. This ensures smooth performance when working with intricate designs or simulations. Workstations are built for reliability and stability. This is particularly important in professional settings where downtime can be costly, and tasks are often time-sensitive.

– Servers:

Servers are specialized computers designed to provide services, resources, or data to other computers, known as clients, within a network. They play a central role in facilitating communication, sharing resources, and delivering specific functionalities to users or other devices connected to the network. Servers are dedicated to providing specific services, resources, or functionalities to client devices within a network. These services can include file storage, web hosting, email, databases, and more. Servers are equipped with multiple network interfaces or connections to ensure efficient communication with other devices on the network. They often have high-speed and reliable network connections.

3. Based on Usage:

– General-Purpose Computers:

General-purpose computers are versatile computing devices designed to handle a broad spectrum of tasks and applications. They are characterized by their flexibility, allowing users to perform various activities, ranging from productivity and entertainment to communication and creative endeavors.

Advantages:

- Versatility:

- General-purpose computers offer versatility, allowing users to perform a wide range of tasks without the need for specialized hardware.

- Accessibility:

- These computers are designed to be accessible to a broad user base, including individuals with varying levels of technical expertise.

- Ubiquity:

- General-purpose computers are widely available and used across diverse settings, from homes and offices to educational institutions.

- Cost-Effectiveness:

- In many cases, general-purpose computers are cost-effective, providing a balance between performance and affordability.

Disadvantages:

- Performance Limitations:

- While versatile, general-purpose computers may not excel in specific tasks compared to specialized devices designed for those tasks.

- Not Optimized for Specialized Applications:

- For certain professional or resource-intensive tasks (e.g., high-end gaming, professional video editing), specialized hardware configurations may be more suitable.

Special-Purpose Computers:

Special-purpose computers are designed with a focus on performing specific tasks or functions within particular industries or applications. Unlike general-purpose computers, which are versatile and can handle a broad range of tasks, special-purpose computers are tailored to excel in a specific area, often providing optimized performance for dedicated functions. Special-purpose computers are engineered with a primary focus on fulfilling specific tasks, functions, or operations. Many special-purpose computers are embedded within devices, machines, or systems to control and manage specific functions seamlessly.

Advantages:

- Optimized Performance:

- Special-purpose computers excel in their designated tasks, offering optimized performance for specific applications.

- Efficiency:

- These computers are often more efficient in terms of power consumption and processing speed for their intended functions.

- Reliability:

- Special-purpose computers are engineered for reliability and stability in performing the tasks they are designed for.

- Tailored to Industry Needs:

- Designed to meet the unique requirements of specific industries, special-purpose computers address the challenges and demands of those sectors.

Disadvantages:

- Limited Versatility:

- Special-purpose computers lack the versatility of general-purpose computers and may not be suitable for tasks outside their designated scope.

- Cost:

- Developing and manufacturing special-purpose computers can be expensive, and their use may be limited to specific industries or applications.

4. Based on Architecture:

RISC (Reduced Instruction Set Computing) Computers:

RISC (Reduced Instruction Set Computing) is a computer architecture design that emphasizes simplicity and efficiency by using a small and highly optimized set of instructions. RISC processors execute instructions with a focus on speed and efficiency, often sacrificing some versatility to achieve enhanced performance. Most RISC instructions are designed to execute in a single clock cycle, promoting faster and more streamlined processing.

Advantages of RISC Computers:

- RISC architecture’s focus on simplicity and optimization results in high-speed execution of instructions, leading to improved overall performance.

- The reduced instruction set simplifies the design of the processor’s control unit, leading to more straightforward hardware implementations.

- Pipelining in RISC processors contributes to efficient instruction execution, enabling overlapping of stages for improved throughput.

- Simpler instruction decoding and execution processes in RISC architecture can lead to lower power consumption.

- RISC architecture is often scalable, allowing for easy integration of multiple processor cores for parallel processing.

Limitations of RISC Computers:

- RISC computers may have limitations in handling complex instructions and tasks, making them less versatile compared to CISC architectures.

- Achieving simplicity in instructions may lead to the need for more instructions to perform complex tasks, resulting in larger program sizes.

- The load/store architecture can result in additional instructions for moving data between registers and memory, causing potential overhead.

CISC (Complex Instruction Set Computing) Computers:

CISC (Complex Instruction Set Computing) is a computer architecture design that employs a larger and more diverse set of instructions. CISC processors aim to provide versatility and completeness in executing instructions, often at the cost of increased complexity in hardware design. CISC computers have a comprehensive and varied set of instructions, allowing them to handle a wide range of tasks. CISC processors include complex instructions that can perform multiple operations in a single instruction, reducing the number of instructions needed for certain tasks.

Advantages of CISC Computers:

- CISC architecture excels in handling a wide variety of instructions and tasks, making it suitable for diverse applications.

- Complex instructions in CISC architecture can reduce the number of instructions needed for certain tasks, leading to smaller program sizes.

- CISC processors can perform certain operations directly on memory, potentially reducing the need for explicit register-to-register operations.

Limitations of CISC Computers:

- The larger instruction set and complex instructions in CISC architecture can result in intricate hardware design, potentially leading to increased manufacturing costs and longer development times.

- The complexity of instructions may lead to longer execution times, affecting the clock speed and overall performance of CISC processors.

- The complexity of CISC instructions may result in higher power consumption compared to RISC architectures.
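The trade-off between the two designs can be sketched with a toy machine. The following is only an illustration under stated assumptions: the opcodes `LOAD`, `STORE`, `ADD`, and `ADDM`, and the function `run_program`, are hypothetical and do not correspond to any real instruction set. It shows the same addition written in a load/store (RISC-like) style and as a single memory-to-memory (CISC-like) instruction.

```python
def run_program(program, memory):
    """Execute a list of (opcode, *operands) tuples on a toy machine."""
    registers = {}
    for op, *args in program:
        if op == "LOAD":      # register <- memory
            reg, addr = args
            registers[reg] = memory[addr]
        elif op == "STORE":   # memory <- register
            reg, addr = args
            memory[addr] = registers[reg]
        elif op == "ADD":     # register-to-register add (RISC-like)
            dst, a, b = args
            registers[dst] = registers[a] + registers[b]
        elif op == "ADDM":    # memory-to-memory add (CISC-like, hypothetical)
            dst, a, b = args
            memory[dst] = memory[a] + memory[b]
        else:
            raise ValueError(f"unknown opcode {op}")
    return memory


# Load/store style: data must pass through registers.
risc_program = [
    ("LOAD", "r1", "x"),
    ("LOAD", "r2", "y"),
    ("ADD", "r3", "r1", "r2"),
    ("STORE", "r3", "z"),
]

# One complex instruction operating directly on memory.
cisc_program = [("ADDM", "z", "x", "y")]

risc_result = run_program(risc_program, {"x": 2, "y": 3, "z": 0})
cisc_result = run_program(cisc_program, {"x": 2, "y": 3, "z": 0})

print(len(risc_program), len(cisc_program))  # 4 1
print(risc_result["z"], cisc_result["z"])    # 5 5
```

Both programs produce the same result. The RISC-like version needs more instructions, each of which is simple, while the CISC-like version needs only one instruction that does more work.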

5. Based on Brand and Model:

– IBM-Compatible Computers:

IBM-Compatible computers, often referred to as “PCs” (Personal Computers), are based on the architecture pioneered by International Business Machines (IBM). The term typically denotes computers that adhere to the standards set by IBM’s original PC design, which became a widely adopted industry standard. IBM-Compatible computers encompass a broad range of brands and models that adhere to these compatibility standards. They follow the hardware and software architecture introduced by IBM with its original Personal Computer (PC) in the early 1980s. IBM-Compatible computers typically include expansion slots, such as PCI and PCIe, to allow users to add additional hardware components like graphics cards, sound cards, and network cards. Most IBM-Compatible computers use processors based on the x86 architecture, including Intel and AMD processors. This architecture has become a standard for compatibility.

Apple Macintosh Computers:

Apple Macintosh computers, commonly known as Macs, are manufactured by Apple Inc. and run the macOS operating system, which Apple designs and develops. macOS features a user-friendly interface and is known for its stability and security. While Macs historically had a distinct architecture compared to IBM-Compatible computers, the transition to Intel processors in 2006 brought about some level of compatibility between the two platforms. Apple’s more recent Macs with Apple Silicon utilize a unified memory architecture, allowing the CPU and GPU to access the same pool of memory for improved performance. Macintosh computers are known for their sleek and minimalist design, incorporating features like Retina displays, thin form factors, and high-quality build materials.

6. Based on Portability:

– Desktop Computers:

– Stationary computers with separate monitor, keyboard, and processing unit.

– Laptops:

– Portable computers with integrated components.

– Tablets and Smartphones:

– Handheld devices with computing capabilities.

7. Based on Memory Hierarchy:

– Cache Memory:

Cache memory is a type of fast, small-sized memory that is located near the CPU. It serves as a temporary storage space for frequently accessed instructions and data, aiming to reduce the time it takes for the CPU to retrieve information. Cache memory provides rapid access to data and instructions, significantly reducing the latency in fetching information from slower main memory. Due to its proximity to the CPU, cache memory is limited in size. It typically consists of multiple levels (L1, L2, L3) with varying sizes and speeds.
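The effect of a cache can be sketched in a few lines of Python. This is a minimal model, not how hardware caches are actually built: the class `LRUCache`, its least-recently-used eviction policy, and the dictionary standing in for main memory are all assumptions made for illustration.

```python
from collections import OrderedDict


class LRUCache:
    """Toy cache that keeps the most recently used addresses (hypothetical model)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.lines = OrderedDict()  # address -> cached value
        self.hits = 0
        self.misses = 0

    def read(self, address, main_memory):
        if address in self.lines:
            self.hits += 1
            self.lines.move_to_end(address)  # mark as most recently used
            return self.lines[address]
        # Miss: fetch from the slower main memory and keep a copy.
        self.misses += 1
        value = main_memory[address]
        self.lines[address] = value
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)  # evict the least recently used entry
        return value


main_memory = {addr: addr * 2 for addr in range(100)}
cache = LRUCache(capacity=4)

# A loop that keeps touching the same four addresses.
for addr in [0, 1, 2, 3] * 5:
    cache.read(addr, main_memory)

print(cache.hits, cache.misses)  # 16 4
```

Only the first access to each address goes to main memory. The remaining 16 reads are served from the cache, which is why frequently accessed data benefits most from caching.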

– RAM (Random Access Memory):

RAM (Random Access Memory) is a type of volatile memory used by the computer for active programs and data during its operation. Unlike cache memory, RAM is larger in size and serves as a workspace for the CPU. RAM is volatile, meaning that it loses its contents when the power is turned off. It is used for storing data that is actively being processed by the CPU. It allows random access to any storage location, enabling quick read and write operations. This is in contrast to sequential-access storage such as magnetic tape, where data must be read in order.

– Storage (Hard Drives, SSDs):

Storage, which includes hard drives and Solid-State Drives (SSDs), represents non-volatile memory used for long-term storage of data, applications, and the operating system. Storage retains data even when the power is turned off, making it suitable for long-term storage. Storage devices have much larger capacities compared to cache memory and RAM. Hard drives offer higher capacities but at a slower speed, while SSDs provide faster access times.

Classification on the basis of data handling

Analog: An analog computer is a form of computer that uses continuously variable physical quantities, such as electrical, mechanical, or hydraulic quantities, to model the problem being solved. Anything that varies continuously with time can be described as analog, just as an analog clock measures time by the distance its hands travel around the circular dial.

Advantages of using analogue computers:

- It allows real-time operation and computation, with continuous representation of all data within the range of the analogue machine.

- In some applications, it allows performing calculations without taking the help of transducers for converting the inputs or outputs to digital electronic form and vice versa.

- The programmer can scale the problem for the dynamic range of the analogue computer. It provides insight into the problem and helps understand the errors and their effects.

Example: An analog clock measures time using the continuously changing position of the clock hands around the circular dial.

Disadvantages:

- Limited Precision: Inherent limitations in precision due to continuous representation.

- Sensitivity to Noise: Susceptible to noise and external interference affecting accuracy.

- Limited Flexibility: Less versatile than digital computers, designed for specific applications.

- Difficulty in Programming: Complex programming, lack of standardized languages.

- Lack of Storage and Memory: Limited storage and memory capabilities compared to digital systems.

Digital: A digital computer performs calculations and logical operations with quantities represented as digits, usually in the binary number system of “0” and “1”. It solves problems by processing information expressed in discrete form. By manipulating combinations of binary digits, it can perform mathematical calculations, organize and analyze data, control industrial and other processes, and simulate dynamic systems such as global weather patterns.

Advantages of digital computers:

- It allows you to store a large amount of information and to retrieve it easily whenever you need it.

- New features can be added to digital systems easily.

- Different applications can be used in digital systems just by changing the program without making any changes in hardware.

- The cost of hardware is lower due to advances in IC technology.

- It offers high speed as the data is processed digitally.

- It is highly reliable as it uses error correction codes.
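The idea behind error correction can be sketched with the simplest possible scheme, a three-fold repetition code. This is an illustrative assumption only: real memory and storage systems typically use more efficient codes, and the function names `encode` and `decode` are hypothetical.

```python
def encode(bits):
    """Repeat every bit three times."""
    return [copy for bit in bits for copy in (bit, bit, bit)]


def decode(coded):
    """Recover each bit by majority vote over its group of three copies."""
    return [1 if sum(coded[i:i + 3]) >= 2 else 0 for i in range(0, len(coded), 3)]


message = [1, 0, 1, 1]
sent = encode(message)

received = sent.copy()
received[4] ^= 1  # flip one bit to simulate noise during storage or transmission

recovered = decode(received)
print(recovered == message)  # True
```

A single flipped bit in any group of three is outvoted by the two correct copies. The cost is that three bits are stored for every bit of data.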

Disadvantages:

- Data storage and retrieval depend on the availability and reliability of storage devices; potential risk of data loss or corruption.

- Continuous updates and additions may lead to compatibility issues, requiring careful management.

- Initial investment in digital systems may be high, especially for cutting-edge technology; maintenance and upgrades can also contribute to costs.

- Speed can be affected by factors such as system complexity, software efficiency, and hardware limitations.

- While error correction enhances reliability, it may not eliminate all potential sources of system failures or malfunctions.

Hybrid: A hybrid computer processes both analog and digital data. It is typically a digital computer that accepts analog signals, converts them to digital form, and processes them digitally.
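The conversion from an analog signal to digital form can be sketched as sampling followed by quantization. The sketch below rests on assumptions chosen for illustration: a sine wave stands in for the analog input, the voltage range is -1 to 1, the resolution is 3 bits, and the function `quantize` is hypothetical rather than a model of any particular converter.

```python
import math


def quantize(voltage, v_min=-1.0, v_max=1.0, bits=3):
    """Map a continuous voltage to one of 2**bits integer levels."""
    levels = 2 ** bits
    clamped = min(max(voltage, v_min), v_max)  # keep the input inside the range
    step = (v_max - v_min) / (levels - 1)      # voltage width of one level
    return round((clamped - v_min) / step)


# "Analog" input: a sine wave sampled at 8 points over one period.
samples = [math.sin(2 * math.pi * n / 8) for n in range(8)]

codes = [quantize(v) for v in samples]         # integer levels from 0 to 7
binary = [format(c, "03b") for c in codes]     # the same levels as 3-bit strings

for v, b in zip(samples, binary):
    print(f"{v:+.3f} -> {b}")
```

Each continuous sample is replaced by the nearest of eight discrete levels. Using more bits gives more levels and a closer approximation of the original signal.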

Advantages of using hybrid computers:

- Its computing speed is very high due to the all-parallel configuration of the analogue subsystem.

- It produces precise and quick results that are more accurate and useful.

- It can solve and manage large equations in real time.

- It helps in on-line data processing.

Disadvantages:

- Complex parallel processing may lead to increased heat generation and power consumption, potentially requiring efficient cooling solutions.

- Precision may be compromised if there are issues with the calibration or synchronization between the analog and digital components.

- Continuous on-line processing may pose challenges in terms of data integrity and potential errors if not properly managed.

These classifications provide a broad overview of the diverse range of computers available, each designed for specific purposes and user needs.

Data and Program Representation in Computers:

Data Representation:

– Binary Code: Computers represent data using a binary code system, which consists of the digits 0 and 1. Each binary digit is called a bit, and a group of 8 bits forms a byte. Binary code is the fundamental language used by computers to process and store information.

– Data Types: Different types of data, such as integers, floating-point numbers, and characters, are encoded using binary representation. For example:

Integers may be represented using binary numbers (e.g., 1010 for decimal 10).

Floating-point numbers use a binary format to represent real numbers with a fractional part.

Characters are often encoded using character sets such as ASCII or Unicode, where each character is assigned a unique binary code.

– Representation Precision: The precision of data representation depends on factors like the number of bits used. More bits allow for a greater range of values and increased precision, especially in the case of floating-point numbers.
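The encodings described above can be inspected directly in Python. This is a small sketch: the variable names are illustrative, and the floating-point part assumes the common 32-bit IEEE 754 format, which the text above does not name.

```python
import struct

# Integers: decimal 10 is 1010 in binary.
ten_bits = format(10, "b")        # '1010'
ten_byte = format(10, "08b")      # padded to one byte: '00001010'

# Characters: 'A' has code 65 in both ASCII and Unicode.
a_code = ord("A")
a_bits = format(a_code, "08b")    # '01000001'

# A character outside ASCII (the euro sign) takes 3 bytes in UTF-8.
euro_utf8 = "\u20ac".encode("utf-8")

# Floating point: pack 0.5 as a 32-bit value and show its bits.
half_bytes = struct.pack(">f", 0.5)
half_bits = "".join(format(b, "08b") for b in half_bytes)

print(ten_bits, ten_byte)
print(a_code, a_bits)
print(len(euro_utf8))
print(half_bits)
```

In the last line of output, the first bit is the sign, the next eight bits are the exponent, and the remaining bits hold the fraction.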

Program Representation:

Programming Languages: Programs are written in high-level programming languages, which use human-readable syntax and structures. Examples include languages like C, Java, Python, and others.

Compilation and Interpretation: High-level programming code is translated into machine code, which is the binary code that the CPU understands. This translation can occur through compilation (generating an executable file) or interpretation (translating code on-the-fly during execution).

Binary Representation: The machine code, which is in binary format, represents the program’s instructions and data. Each instruction is encoded as a sequence of binary digits that the CPU can execute.

Memory Storage: Once translated into machine code, the program is stored in the computer’s memory (RAM) during execution. The CPU fetches and executes these instructions sequentially.
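The fetch-and-execute cycle can be sketched with a toy processor. Everything in this example is a hypothetical simplification: the 8-bit instruction format (high 4 bits for the opcode, low 4 bits for the operand), the three opcodes, and the function `run_toy_cpu` are invented for illustration and do not match any real CPU.

```python
# Hypothetical opcodes for a toy accumulator machine.
LOAD_IMM = 0b0001  # accumulator <- operand
ADD_IMM = 0b0010   # accumulator <- accumulator + operand
HALT = 0b1111      # stop and return the accumulator

# The program as it would sit in memory: one binary word per instruction.
program = [
    0b0001_0101,  # LOAD_IMM 5
    0b0010_0011,  # ADD_IMM 3
    0b1111_0000,  # HALT
]


def run_toy_cpu(memory):
    accumulator = 0
    pc = 0  # program counter: address of the next instruction
    while True:
        instruction = memory[pc]        # fetch
        pc += 1
        opcode = instruction >> 4       # decode: high 4 bits
        operand = instruction & 0b1111  # decode: low 4 bits
        if opcode == LOAD_IMM:          # execute
            accumulator = operand
        elif opcode == ADD_IMM:
            accumulator += operand
        elif opcode == HALT:
            return accumulator
        else:
            raise ValueError(f"unknown opcode {opcode:04b}")


result = run_toy_cpu(program)
print(result)  # 8
```

The program counter advances one instruction at a time, which is the sequential fetching described above. Real processors add jumps, registers, and many more instructions, but the loop has the same shape.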

Advantages of Binary Representation:

1. Simplicity: Binary representation simplifies electronic circuit design and processing, as electronic components can easily distinguish between two states (0 and 1).

2. Consistency: Binary representation provides a consistent and universally understood language for both data and program execution in computers.

3. Compactness: Binary representation is relatively compact, allowing efficient storage and transmission of information.

Challenges:

1. Human Readability: Binary code is not easily readable or writable for humans. Programming in binary is impractical due to its complexity.

2. Limited Precision: The finite number of bits used for data representation imposes limitations on precision, especially for floating-point numbers.

3. High-Level Abstraction: High-level programming languages are designed to abstract away low-level details, making it easier for humans to write and understand code. However, this abstraction requires translation to binary for execution.
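The precision limits listed above are easy to observe. The following sketch assumes the 64-bit floats that Python uses by default and the 32-bit format available through the standard `struct` module. The variable names are illustrative.

```python
import struct

# With n bits, an unsigned integer can take 2**n distinct values.
max_8_bit = 2 ** 8 - 1     # largest value that fits in one byte
max_16_bit = 2 ** 16 - 1   # largest value that fits in two bytes

# 0.1 has no exact binary representation, so a small rounding error appears.
sum_is_exact = (0.1 + 0.2 == 0.3)

# Storing the same value in 32 bits instead of 64 loses further precision.
as_float32 = struct.unpack(">f", struct.pack(">f", 0.1))[0]
error_32 = abs(as_float32 - 0.1)

print(max_8_bit, max_16_bit)  # 255 65535
print(sum_is_exact)           # False
print(error_32 > 0)           # True
```

Doubling the number of bits greatly increases the range of integers, and it shrinks the rounding error of floating-point values without ever removing it entirely.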

Applications of Computers:

1. Business:

– Accounting: Computers automate financial calculations, ledger management, and financial reporting, reducing errors and speeding up the accounting process.

– Inventory Management: Computerized systems track inventory levels, manage stock replenishment, and provide real-time insights into product availability.

– Data Analysis: Businesses leverage computer-based data analysis tools to extract meaningful insights from large datasets, aiding strategic decision-making.

2. Education:

– Learning Management Systems (LMS): LMS platforms facilitate online education, allowing educators to create, manage, and deliver course content, assessments, and discussions.

– Research: Computers assist researchers in accessing and analyzing vast amounts of academic literature, conducting simulations, and managing experimental data.

– Simulations: Virtual simulations provide hands-on learning experiences in fields like science, engineering, and healthcare.

3. Medicine:

– Diagnosis: Computer-assisted diagnosis involves analyzing medical images, patient data, and clinical records to support healthcare professionals in making accurate diagnoses.

– Imaging: Medical imaging technologies, including MRI and CT scans, rely on computer algorithms for image reconstruction, enhancement, and analysis.

– Research: Computers aid medical researchers in processing genomic data, conducting drug discovery simulations, and modeling diseases.

4. Entertainment:

– Gaming: Computers serve as the primary platform for gaming, providing high-quality graphics, complex simulations, and interactive gameplay experiences.

– Multimedia: Content creation and consumption, such as video streaming, music production, and graphic design, heavily rely on computer technology.

– Virtual Reality (VR): VR applications, powered by computers, create immersive virtual environments for entertainment and training purposes.

5. Communication:

– Email: Computers and email services enable quick and efficient communication through electronic mail.

– Social Media: Social networking platforms, accessible through computers, connect individuals globally, facilitating communication, information sharing, and collaboration.

– Video Conferencing: Computers support real-time video communication, fostering remote collaboration and virtual meetings.

6. Science and Research:

– Data Analysis: Computers analyze experimental data, conduct statistical analyses, and generate visual representations, aiding scientists in drawing conclusions from research.

– Simulations: Computational simulations enable scientists to model and study complex systems, such as climate patterns, chemical reactions, and biological processes.

– Modeling: Computer modeling helps scientists create virtual representations of phenomena, contributing to a deeper understanding of natural processes.

7. Manufacturing:

– Automation: Computers control and coordinate manufacturing processes, optimizing efficiency, reducing manual labor, and enhancing production speed.

– Control Systems: Industrial control systems use computers to monitor and adjust machinery parameters, ensuring precise control over manufacturing processes.

– Quality Control: Computers assist in quality control through automated inspections, data analysis, and the identification of defects or deviations from quality standards.

These applications illustrate how computers have become integral tools across diverse industries, playing a critical role in enhancing productivity, efficiency, and innovation. The continued advancement of computer technology is expected to further expand their applications and impact on various aspects of human life and industry.