Internet and Internet Services

By Paribesh Sapkota

Introduction

The Internet has emerged as a transformative force, reshaping the way individuals, businesses, and societies interact and communicate. It is a vast network of interconnected computers and devices that facilitates the exchange of information globally. This digital ecosystem has evolved over several decades, revolutionizing the way we access and share information. In this overview, we will explore the history, architecture, and various aspects of managing and connecting to the Internet.

History of Internet

The roots of the Internet can be traced back to the 1960s, when the United States Department of Defense's Advanced Research Projects Agency (ARPA) funded the development of a packet-switched computer network called ARPANET, which sent its first messages in 1969. ARPANET was built to let researchers share computing resources across distant sites, and its decentralized design could keep working despite partial outages, a property often associated with military communication resilience. In 1983, ARPANET adopted the TCP/IP protocols, and over time it evolved into the modern Internet, expanding globally and transitioning from a research and military tool to a platform for global communication, collaboration, and commerce.

The Internet Architecture

The Internet’s architecture is a complex and distributed system that allows for the seamless communication and exchange of information across the globe. Key features of the Internet architecture include:

- Decentralization: The Internet is not owned or controlled by a single entity. Instead, it is a decentralized network of networks. Various organizations, Internet Service Providers (ISPs), and entities contribute to the infrastructure, making it resilient and adaptable.

- Packet-Switching Model: The packet-switching model is fundamental to how data is transmitted over the Internet. In this model, data is broken down into smaller packets for efficient transmission. These packets can take different routes to reach their destination, where they are reassembled. This method allows for optimal use of network resources and ensures that data can flow even if certain paths are unavailable.

- Interconnected Networks: The Internet is a vast interconnection of numerous networks worldwide. These networks can range from small local networks to large global networks operated by major telecommunication companies. The interconnectivity allows for the global reach of the Internet.

- Internet Protocol (IP): The Internet Protocol (IP) is a set of rules that govern how data is sent and received over the Internet. IP addresses uniquely identify devices on the network, allowing for the routing of data packets to their intended destinations. The two main versions of IP in use today are IPv4 and IPv6.

- Transmission Control Protocol (TCP) and User Datagram Protocol (UDP): These transport-layer protocols run on top of IP. TCP provides reliable, ordered, connection-oriented communication and retransmits lost data, while UDP provides faster, connectionless communication without delivery or ordering guarantees, which suits applications such as video calls and DNS lookups.

- Routing: Internet routers play a crucial role in determining efficient paths for data packets to travel from the source to the destination. Routing protocols, such as the Border Gateway Protocol (BGP) between networks and Open Shortest Path First (OSPF) within a network, let routers exchange information about which paths are available.

- Redundancy: The decentralized nature of the Internet inherently provides redundancy. If one path or network fails, data packets can find alternative routes to reach their destination. This redundancy enhances the reliability and fault tolerance of the Internet.

- Reliability and Scalability: The Internet’s architecture is designed to be reliable and scalable. As the number of connected devices and the volume of data traffic increase, the infrastructure can adapt to accommodate these changes. This scalability is crucial for supporting the ever-growing demands of users and applications.

- Protocols and Standards: The Internet relies on a set of protocols and standards to ensure interoperability among different devices and networks. Protocols such as HTTP, FTP, and DNS define how data is transmitted and accessed.
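To make the packet-switching model concrete, here is a minimal Python sketch, not a model of any real network stack: a message is split into numbered packets, the packets arrive out of order (simulated with a shuffle, as if they had taken different routes), and the receiver reassembles them using their sequence numbers. The `Packet` structure and the 8-byte packet size are illustrative assumptions.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    """A simplified, hypothetical packet: a sequence number plus a chunk of data."""
    seq: int
    payload: bytes


def split_into_packets(message: bytes, size: int = 8) -> list[Packet]:
    """Break a message into chunks of at most `size` bytes, numbered in order."""
    return [
        Packet(seq=i, payload=message[start:start + size])
        for i, start in enumerate(range(0, len(message), size))
    ]


def reassemble(packets: list[Packet]) -> bytes:
    """Rebuild the message at the destination by sorting on sequence number."""
    return b"".join(p.payload for p in sorted(packets, key=lambda p: p.seq))


if __name__ == "__main__":
    message = b"Packets may take different routes to their destination."
    packets = split_into_packets(message)
    random.shuffle(packets)  # simulate packets arriving out of order via different routes
    assert reassemble(packets) == message
    print(f"{len(packets)} packets arrived out of order and were reassembled")
```

Real protocols such as TCP add acknowledgments and retransmission on top of this basic numbering idea.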

Managing the Internet

Managing the Internet involves the coordination of various aspects, including the development of standards, allocation of resources, and addressing governance challenges. Several organizations contribute to this collaborative effort, each with specific roles and responsibilities. Two key organizations at the forefront of Internet management are the Internet Corporation for Assigned Names and Numbers (ICANN) and the Internet Engineering Task Force (IETF).

Internet Corporation for Assigned Names and Numbers (ICANN):

- Domain Name System (DNS) Management: ICANN oversees the global DNS, which translates human-readable domain names into IP addresses. It coordinates the allocation of domain names (through accredited registries and registrars) and IP addresses to ensure uniqueness and prevent conflicts.

- Generic Top-Level Domain (gTLD) Administration: ICANN manages the assignment and operation of generic top-level domains (e.g., .com, .org, .net). It has played a crucial role in the expansion of gTLDs to increase domain name options and address the growing demands of the Internet.

- IP Address Allocation: ICANN, in collaboration with the five Regional Internet Registries (RIRs), is involved in the allocation and assignment of IP address blocks globally. This ensures that IP addresses are distributed efficiently and according to established policies.

- Coordination of Root Server System: ICANN coordinates the root server system, a critical component of the DNS, although the root servers themselves are operated by a number of independent organizations. The root servers answer queries using the root zone, which lists every top-level domain and the name servers responsible for it.

- Policy Development: ICANN facilitates the development of policies related to domain names, IP addresses, and other aspects of Internet governance. It engages with the global Internet community, including stakeholders such as governments, businesses, and technical experts, to establish consensus-driven policies.

Internet Engineering Task Force (IETF):

- Standardization of Protocols: The IETF is responsible for developing and standardizing the protocols that form the foundation of the Internet. Working groups within the IETF focus on specific areas, such as the development of protocols for communication, security, and network management.

- Request for Comments (RFCs): The IETF publishes documents known as Request for Comments (RFCs), which document specifications, procedures, and innovations related to Internet standards. RFCs serve as a reference for the implementation and operation of Internet protocols.

- Open and Collaborative Development: The IETF operates on a principle of open participation and collaboration. It encourages contributions from individuals, researchers, engineers, and vendors worldwide to ensure diverse perspectives and expertise in the development of Internet standards.

- Working Groups: The IETF organizes working groups to focus on specific technical challenges or areas of interest. These working groups engage in discussions, research, and development to address evolving needs in Internet technology.

Connecting to the Internet

Connecting to the Internet is a fundamental step in accessing the vast array of online resources and services. The process involves various technologies and infrastructure, and Internet Service Providers (ISPs) play a crucial role in facilitating these connections. Here are some common methods of connecting to the Internet:

Technologies for Connecting to the Internet:

- Broadband:

- Overview: Broadband refers to high-speed internet connections that offer faster data transfer rates compared to traditional dial-up connections.

- Types: Various technologies fall under the broadband category, including Digital Subscriber Line (DSL), cable, fiber-optic, and satellite.

- DSL (Digital Subscriber Line):

- Overview: DSL uses existing telephone lines to provide high-speed internet access. It allows users to connect to the internet and make voice calls simultaneously.

- Advantages: DSL offers faster speeds than dial-up, and the connection is always on.

- Cable Internet:

- Overview: Cable internet utilizes the same coaxial cables that deliver cable television signals. It provides high-speed internet access and is widely available in urban and suburban areas.

- Advantages: Cable internet typically offers fast download speeds, although bandwidth is shared among nearby users and can slow down during peak hours.

- Fiber-Optic:

- Overview: Fiber-optic internet uses thin strands of glass or plastic to transmit data using light signals. It offers extremely high-speed internet access and is considered one of the fastest technologies.

- Advantages: Fiber-optic connections can provide symmetrical speeds for both uploading and downloading, and because they carry light rather than electrical signals, they are immune to electromagnetic interference.

- Satellite Internet:

- Overview: Satellite internet involves communication with satellites in orbit around the Earth. It is particularly useful in rural or remote areas where other types of high-speed connections may be unavailable.

- Advantages: Satellite internet provides coverage in areas where other broadband options are limited.

Internet Service Providers (ISPs):

- Role of ISPs:

- ISPs are companies that provide individuals and businesses with access to the Internet. They manage the necessary infrastructure, such as servers, routers, and data centers, to enable internet connectivity.

- Wired and Wireless Connections:

- ISPs offer both wired and wireless connections. Wired connections include technologies like DSL, cable, and fiber-optic, while wireless options include technologies like Wi-Fi and mobile networks.

- Subscription Plans:

- ISPs typically offer various subscription plans with different speeds and pricing tiers. Users can choose a plan based on their internet usage requirements and budget.

- Installation and Equipment:

- ISPs handle the installation of necessary equipment, such as modems and routers, to establish the internet connection at the user’s location.

- Technical Support:

- ISPs provide technical support to address issues related to connectivity, equipment, and other internet-related services. This support may include troubleshooting, customer service, and assistance with service upgrades.

Internet Connections

The evolution of internet connections has seen a significant shift from slower, traditional methods to high-speed and wireless technologies. Here is an overview of various types of internet connections:

Dial-Up (Historical):

- Overview: Dial-up was one of the earliest methods for connecting to the internet. It used a standard telephone line and a modem, which dialed the ISP's access number to establish a connection.

- Speed: Dial-up connections were relatively slow, typically offering speeds of up to 56 Kbps.

- Reliability: They were prone to disruptions, and users had to contend with tying up their phone lines while connected to the internet.

- Status: Dial-up is now considered obsolete in many regions due to its slow speeds and limitations.
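As a rough, idealized illustration of what 56 Kbps meant in practice, the sketch below computes how long a 5 MB file would take to download over dial-up versus a 100 Mbps broadband link. The file size and broadband speed are arbitrary example values, and the calculation ignores protocol overhead and line noise, so real transfers take longer.

```python
def transfer_time_seconds(size_megabytes: float, speed_kbps: float) -> float:
    """Ideal transfer time: file size in bits divided by link speed in bits per second.

    Assumes 1 MB = 1,000,000 bytes and 1 kbps = 1,000 bits per second.
    """
    bits = size_megabytes * 1_000_000 * 8
    return bits / (speed_kbps * 1_000)


for label, kbps in [("56 kbps dial-up", 56), ("100 Mbps broadband", 100_000)]:
    seconds = transfer_time_seconds(5, kbps)
    print(f"{label}: {seconds:,.1f} s ({seconds / 60:.1f} min)")
```

The same file that takes about 12 minutes over dial-up arrives in well under a second over the broadband link.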

Broadband:

- Overview: Broadband connections offer high-speed internet access and have largely replaced dial-up. They include various technologies such as DSL, cable, and fiber-optic.

- DSL (Digital Subscriber Line): Uses existing telephone lines.

- Cable: Utilizes coaxial cables, often the same lines used for cable television.

- Fiber-Optic: Employs thin strands of glass or plastic to transmit data using light signals, providing high-speed and reliable connectivity.

Wireless Internet Connections:

- Wi-Fi:

- Overview: Wi-Fi, the family of wireless networking technologies based on the IEEE 802.11 standards, enables wireless connectivity within a limited range. It is commonly used for wireless local area networks (WLANs), such as home and office networks.

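- Speed: Wi-Fi speeds vary by standard and conditions but have improved significantly over time, from a maximum of 11 Mbps with 802.11b to multi-gigabit theoretical rates with Wi-Fi 6 (802.11ax).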

- Reliability: Affected by factors like signal interference and distance from the router.

- 4G and 5G Mobile Networks:

- 4G (Fourth Generation): Offers high-speed mobile internet access, facilitating activities like video streaming and online gaming on mobile devices.

- 5G (Fifth Generation): Represents the latest standard, providing even faster speeds, lower latency, and increased capacity. It supports emerging technologies like the Internet of Things (IoT) and augmented reality.

- Satellite Internet:

- Overview: Satellite internet involves communication with satellites in orbit, making it particularly useful in remote or rural areas.

- Speed: Although speeds have improved, satellite internet usually has higher latency than other broadband options, because signals must travel up to a satellite and back down again.

- Reliability: Provides coverage in areas where traditional broadband infrastructure is limited.
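Satellite latency follows from simple arithmetic: a request travels up to the satellite and back down, and the reply makes the same trip in reverse. The sketch below computes this lower bound for a geostationary satellite, about 35,786 km above the equator. The 550 km low-Earth-orbit altitude used for comparison is an illustrative assumption, and the model ignores processing, queuing, and ground-network delays.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458   # speed of light in a vacuum, km per second
GEO_ALTITUDE_KM = 35_786            # geostationary orbit altitude above the equator


def bent_pipe_round_trip_s(altitude_km: float) -> float:
    """Minimum propagation delay for a request and its reply relayed by one satellite.

    Each direction goes ground -> satellite -> ground, so a round trip covers four
    legs. Assumes the satellite is directly overhead, which is the best case.
    """
    return 4 * altitude_km / SPEED_OF_LIGHT_KM_S


print(f"Geostationary: at least {bent_pipe_round_trip_s(GEO_ALTITUDE_KM) * 1000:.0f} ms")
print(f"Low Earth orbit (assumed 550 km): at least {bent_pipe_round_trip_s(550) * 1000:.1f} ms")
```

Nearly half a second of unavoidable delay explains why geostationary links feel sluggish for interactive uses such as gaming or video calls, even when their download speeds are good.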

Factors Influencing Choice:

- Speed Requirements:

- Users with high data demands, such as those streaming HD videos or engaging in online gaming, typically opt for high-speed broadband or 5G.

- Reliability:

- Consideration of how consistently the connection performs, with wired broadband, especially fiber-optic, generally offering more reliability than older technologies such as dial-up.

- Geographical Location:

- Rural areas might have limited access to cable or fiber-optic services, making satellite or mobile connections more viable.

- Cost:

- Budget considerations often influence the choice of internet connection, with higher speeds and reliability usually associated with higher costs.

- Availability:

- The infrastructure available in a specific location impacts the options available to users. Urban areas often have a wider range of choices compared to rural areas.

IP Address:

An IP address is a numerical identifier that enables your device to send and receive data packets across the internet. Because addresses are allocated in blocks to ISPs and organizations in particular regions, an IP address can also reveal a device's approximate location. The internet needs a way to distinguish between different networks, routers, and websites, and IP addresses provide that mechanism, which makes them indispensable to how the internet works. Most IP addresses you will see are purely numeric IPv4 addresses. However, as the number of network users grew enormously, the 32-bit IPv4 address space became too small, so IPv6 was introduced. IPv6 addresses are 128 bits long and written in hexadecimal, so they contain the letters a to f as well as digits.

An IPv4 address is written as four decimal numbers separated by periods (dotted-decimal notation), for example 192.0.2.1. Each number represents 8 bits (one octet) and can range from 0 to 255, so addresses span from 0.0.0.0 to 255.255.255.255.
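The dotted-decimal form is simply a readable way of writing a 32-bit number. The Python sketch below converts an IPv4 address to that number by shifting in one 8-bit octet at a time, then cross-checks the result against Python's standard `ipaddress` module. The function name `ipv4_to_int` is our own.

```python
import ipaddress


def ipv4_to_int(address: str) -> int:
    """Convert dotted-decimal IPv4 notation to its 32-bit integer value."""
    octets = [int(part) for part in address.split(".")]
    if len(octets) != 4 or any(not 0 <= o <= 255 for o in octets):
        raise ValueError(f"not a valid IPv4 address: {address!r}")
    value = 0
    for octet in octets:
        value = (value << 8) | octet   # each octet contributes the next 8 bits
    return value


print(ipv4_to_int("192.0.2.1"))                       # 3221225985
print(int(ipaddress.IPv4Address("192.0.2.1")))       # same value via the standard library
print(ipv4_to_int("255.255.255.255") == 2**32 - 1)   # True: the largest IPv4 address
```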

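IP addresses are not chosen at random. The Internet Assigned Numbers Authority (IANA), a function of ICANN, allocates large blocks of addresses to the five Regional Internet Registries, which in turn assign smaller blocks to ISPs and organizations; these then assign individual addresses to their customers' devices.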

How do IP addresses work?

If your device does not connect to your network the way you expect, or you want to troubleshoot why your network is not working correctly, it helps to understand how IP addresses work.

The Internet Protocol works like a shared language: it is a set of rules that every device follows when exchanging information. Every device that sends, receives, or forwards data on the internet uses this protocol, so computers anywhere in the world can communicate with one another. Each packet carries a source and a destination IP address, and routers along the way use the destination address to forward the packet toward its target.
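Routers forward each packet by comparing its destination IP address against a routing table and choosing the most specific matching entry, a rule known as longest-prefix match. The sketch below shows that rule with Python's standard `ipaddress` module; the routing table and the next-hop names are hypothetical, and it handles IPv4 destinations only.

```python
import ipaddress

# Hypothetical routing table: destination network -> next hop (example values only).
ROUTES = {
    ipaddress.ip_network("0.0.0.0/0"): "ISP uplink",        # default route matches everything
    ipaddress.ip_network("10.0.0.0/8"): "corporate VPN",
    ipaddress.ip_network("10.1.0.0/16"): "branch office",
    ipaddress.ip_network("192.168.1.0/24"): "home LAN",
}


def next_hop(destination: str) -> str:
    """Pick the most specific (longest-prefix) route that contains the destination."""
    addr = ipaddress.ip_address(destination)
    matches = [net for net in ROUTES if addr in net]
    best = max(matches, key=lambda net: net.prefixlen)
    return ROUTES[best]


print(next_hop("10.1.2.3"))     # branch office (the /16 is more specific than the /8)
print(next_hop("10.200.0.1"))   # corporate VPN
print(next_hop("8.8.8.8"))      # ISP uplink (only the default route matches)
```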

Types of IP addresses

There are various classifications of IP addresses, and each category further contains some types.

Consumer IP addresses

Every individual or business with an active internet service uses two types of IP addresses: private IP addresses and public IP addresses. The terms refer to where an address is used: a private IP address is used inside a network, whereas a public IP address is used outside it, on the internet.

- Private IP addresses: Every device connected to your network is assigned a private IP address that is unique within that network. This includes laptops, desktops, tablets, and cellphones, as well as Wi-Fi-capable devices such as smart TVs, printers, and speakers. As the Internet of Things grows, the number of devices in a typical home, and therefore the number of private IP addresses needed, keeps rising. Your router needs a way to tell these devices apart, so it assigns each one a private IP address, usually through the Dynamic Host Configuration Protocol (DHCP). Private addresses come from ranges reserved for this purpose, such as 192.168.0.0 to 192.168.255.255, and are not routed on the public internet.

- Public IP addresses: A public IP address, sometimes called the main address, represents your whole network of connected devices to the outside world. Behind this single public address, each device has its own private IP address, and the router translates between the two using network address translation (NAT). Your router's public IP address is provided by your ISP, which holds a large pool of IP addresses to distribute to its customers. Every device outside your network uses your public IP address to identify your network. Public IP addresses are further classified into two categories: dynamic and static.

- Dynamic IP addresses

As the name suggests, dynamic IP addresses change automatically from time to time. ISPs hold a large pool of IP addresses and assign them to customers as needed. Periodically, they reassign addresses and return unused ones to the pool so they can later be given to another customer. This approach saves the ISP money, because it does not need to reserve a permanent address for every customer.

- Static IP addresses

Static IP addresses, in contrast, do not change. The address is assigned to the device once and stays the same. Most individuals and many businesses do not need a static IP address, but an organization that wants to host its own servers generally does, because a constant address keeps the websites and email services associated with it reachable at the same location.
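Private IPv4 addresses come from three blocks reserved by RFC 1918: 10.0.0.0/8, 172.16.0.0/12, and 192.168.0.0/16. The sketch below checks whether an address falls in one of them. Python's `ipaddress` module also offers an `is_private` attribute, but it covers additional reserved ranges such as loopback (127.0.0.0/8), so this example checks the RFC 1918 blocks explicitly; the function name is our own.

```python
import ipaddress

# The three private IPv4 ranges reserved by RFC 1918.
PRIVATE_RANGES = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),    # 172.16.0.0 through 172.31.255.255
    ipaddress.ip_network("192.168.0.0/16"),
]


def is_rfc1918_private(address: str) -> bool:
    """Return True if an IPv4 address falls in one of the RFC 1918 private ranges."""
    addr = ipaddress.IPv4Address(address)
    return any(addr in network for network in PRIVATE_RANGES)


for ip in ["192.168.1.23", "172.20.0.5", "172.32.0.5", "8.8.8.8"]:
    kind = "private (inside a network)" if is_rfc1918_private(ip) else "public"
    print(f"{ip:>14}: {kind}")
```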

Types of website IP addresses

1. Shared IP addresses

Startups, individual website owners, and small and medium-sized enterprises (SMEs) that do not want to invest in a dedicated IP address at the outset can opt for a shared hosting plan. Many web hosting providers offer shared hosting, in which two or more websites are hosted on the same server and share its IP address. Shared hosting is best suited to websites with moderate traffic and a limited number of pages.

2. Dedicated IP addresses

Web hosting providers also offer dedicated IP addresses, which are assigned to a single website. A dedicated IP address is often considered more secure because the site does not share its address with other websites, and it allows the owner to run services such as a File Transfer Protocol (FTP) server. This makes it easier to share and transfer files with many people within a business, and it also enables anonymous FTP access. Another advantage is that users can reach the website directly through its IP address rather than typing the full domain name.
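When several websites share one IP address, the server still needs to know which site a visitor wants. With HTTP/1.1, the browser sends the requested hostname in a `Host` header (and HTTPS clients also send it during the TLS handshake through Server Name Indication), so the server can choose the right site. The following is a simplified sketch of this name-based virtual hosting; the site names and pages are hypothetical, and the parser ignores edge cases such as IPv6 literal hosts.

```python
# Hypothetical mapping of hostnames to sites that share one server and one IP address.
SITES = {
    "bakery.example": "<h1>Welcome to the bakery</h1>",
    "plumber.example": "<h1>Local plumbing services</h1>",
}


def host_header(raw_request: str) -> str:
    """Extract the Host header value (without any port) from a raw HTTP/1.1 request."""
    for line in raw_request.split("\r\n")[1:]:
        if not line:                  # a blank line ends the header section
            break
        name, _, value = line.partition(":")
        if name.strip().lower() == "host":
            return value.strip().split(":")[0].lower()
    raise ValueError("request has no Host header")


def choose_site(raw_request: str) -> str:
    """Serve different content for the same IP address depending on the Host header."""
    return SITES.get(host_header(raw_request), "<h1>404 Not Found</h1>")


request = "GET / HTTP/1.1\r\nHost: plumber.example\r\nAccept: text/html\r\n\r\n"
print(choose_site(request))   # <h1>Local plumbing services</h1>
```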

Domain Name System (DNS):

The Domain Name System (DNS) is the Internet's phone book. People access information online through domain names such as nytimes.com or espn.com, while web browsers communicate using Internet Protocol (IP) addresses. DNS translates domain names into IP addresses so that browsers can load Internet resources. Each device connected to the Internet has an IP address that other machines use to find it, and DNS spares people from memorizing IPv4 addresses such as 192.168.1.1 or longer, alphanumeric IPv6 addresses such as 2400:cb00:2048:1::c629:d7a2.

How does DNS work?

The process of DNS resolution involves converting a hostname (such as www.example.com) into a computer-friendly IP address (such as 192.168.1.1). An IP address is given to each device on the Internet, and that address is necessary to find the appropriate Internet device – like a street address is used to find a particular home. When a user wants to load a webpage, a translation must occur between what a user types into their web browser (example.com) and the machine-friendly address necessary to locate the example.com webpage.

In order to understand the process behind the DNS resolution, it’s important to learn about the different hardware components a DNS query must pass between. For the web browser, the DNS lookup occurs “behind the scenes” and requires no interaction from the user’s computer apart from the initial request.
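Behind the scenes, the browser's request becomes a small binary DNS message. As a sketch of what such a query looks like on the wire, following the message format defined in RFC 1035, the function below builds a query for a hostname's A (IPv4 address) record. It only builds the bytes; a real client would send them over UDP port 53 to a resolver and then parse the reply. The function name and the default query ID are arbitrary choices, and labels longer than 63 characters are not checked.

```python
import struct


def build_dns_query(hostname: str, query_id: int = 0x1234) -> bytes:
    """Build a minimal DNS query message asking for the A record of `hostname`."""
    flags = 0x0100   # RD (recursion desired) bit: ask the resolver to do the full lookup
    # Header: ID, flags, 1 question, 0 answer, 0 authority, 0 additional records.
    header = struct.pack("!6H", query_id, flags, 1, 0, 0, 0)
    # QNAME: each label is prefixed with its length; a zero byte marks the root.
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in hostname.rstrip(".").split(".")
    ) + b"\x00"
    question = qname + struct.pack("!2H", 1, 1)   # QTYPE=1 (A), QCLASS=1 (IN, Internet)
    return header + question


query = build_dns_query("www.example.com")
print(query.hex(" "))
```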

DNS server types

Several types of servers are involved in completing a DNS resolution. The following list describes four of them in the order a query passes through them. Each server either returns the requested answer, such as the IP address for a domain name, or refers the query to another name server.

- Recursive server. The recursive server takes DNS queries from an application, such as a web browser. It’s the first resource the user accesses and either provides the answer to the query if it has it cached or accesses the next-level server if it doesn’t. This server may go through several iterations of querying before returning an answer to the client.

- Root name server. This is the first server the recursive server queries if it does not have the answer cached. The root name server does not know the final answer; instead, it acts as an index that refers the query to the TLD servers responsible for the requested top-level domain. These servers are overseen by the Internet Corporation for Assigned Names and Numbers, specifically by the Internet Assigned Numbers Authority (IANA), a function of ICANN.

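- TLD server. The top-level domain (TLD) server holds information for all domain names that share a top-level domain, such as .com, .edu, or .org. The root server refers the query here based on that part of the name, and the TLD server responds with a referral to the authoritative name server for the requested domain.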

- Authoritative name server. The authoritative name server is the final checkpoint for the DNS query. It holds the definitive DNS resource records for a given domain and its subdomains, such as the A record that maps a name to an IPv4 address. It returns the requested record to the recursive server, which sends it back to the client and caches it for future lookups.
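The referral chain described above can be modeled as a toy lookup in Python. This is only an illustration, not real DNS: dictionaries stand in for the root, TLD, and authoritative servers, and the server names and the address 192.0.2.10 (a documentation address) are hypothetical. A real resolver sends network queries at each step and also handles errors, aliases (CNAME records), and caching.

```python
# Hypothetical stand-ins for real servers: each dictionary plays one role in the hierarchy.
ROOT_SERVER = {"com": "tld-server-for-com"}                                   # TLD -> TLD server
TLD_SERVERS = {"tld-server-for-com": {"example.com": "ns1.example.com"}}      # domain -> authoritative server
AUTHORITATIVE_SERVERS = {"ns1.example.com": {"www.example.com": "192.0.2.10"}}  # name -> A record


def resolve(hostname: str) -> tuple[str, list[str]]:
    """Follow referrals from the root to the authoritative server, as a recursive resolver would."""
    name = hostname.rstrip(".")
    labels = name.split(".")
    tld, domain = labels[-1], ".".join(labels[-2:])
    steps = []

    tld_server = ROOT_SERVER[tld]                    # root refers us to the TLD server
    steps.append(f"root server: ask {tld_server} about .{tld}")

    auth_server = TLD_SERVERS[tld_server][domain]    # TLD server refers us to the authoritative server
    steps.append(f"{tld_server}: ask {auth_server} about {domain}")

    address = AUTHORITATIVE_SERVERS[auth_server][name]   # authoritative server answers
    steps.append(f"{auth_server}: {name} has address {address}")
    return address, steps


address, steps = resolve("www.example.com")
for step in steps:
    print(step)
```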

DNS caching

The goal of DNS caching is to reduce the time it takes to get an answer to a DNS query. Caching enables DNS to store previous answers to queries closer to clients and get that same information to them faster the next time it is queried.

DNS data can be cached in a number of places. Some common ones include the following:

- Browser. Most browsers, like Apple Safari, Google Chrome and Mozilla Firefox, cache DNS data by default for a set amount of time. The browser is the first cache that gets checked when a DNS request gets made, before the request leaves the machine for a local DNS resolver server.

- Operating system (OS). Many OSes have built-in DNS resolvers called stub resolvers that cache DNS data and handle queries before they are sent to an external server. The OS is usually queried after the browser or other querying application.

- Recursive resolver. The answer to a DNS query can also be cached on the DNS recursive resolver. A resolver may already hold some of the records needed to answer a query and can then skip steps in the resolution process. For example, if the resolver does not have the A record for a name but has cached the NS records for its top-level domain (such as the .com name servers), it can skip the root server and query the TLD server directly.
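Every cache layer follows the same basic rule: an answer is reused until its time to live (TTL), set in the DNS record by the domain's operator, runs out. The sketch below models that rule. The class name, the 300-second TTL, and the injectable clock (used so the example is deterministic) are our own choices, not part of any real resolver.

```python
import time
from typing import Callable, Optional


class TTLCache:
    """A minimal DNS-style cache: each answer is kept only until its TTL expires."""

    def __init__(self, clock: Callable[[], float] = time.monotonic):
        self._clock = clock
        self._entries: dict[str, tuple[str, float]] = {}   # name -> (address, expiry time)

    def put(self, name: str, address: str, ttl_seconds: float) -> None:
        """Store an answer together with the time at which it stops being valid."""
        self._entries[name] = (address, self._clock() + ttl_seconds)

    def get(self, name: str) -> Optional[str]:
        """Return a cached answer, or None if it is missing or expired."""
        entry = self._entries.get(name)
        if entry is None:
            return None                    # cache miss: ask the next server
        address, expires_at = entry
        if self._clock() >= expires_at:
            del self._entries[name]        # stale answer: discard and treat as a miss
            return None
        return address


now = [0.0]                                # fake clock so the example is repeatable
cache = TTLCache(clock=lambda: now[0])
cache.put("www.example.com", "192.0.2.10", ttl_seconds=300)
print(cache.get("www.example.com"))        # 192.0.2.10 (cache hit)
now[0] = 301.0
print(cache.get("www.example.com"))        # None (the TTL has expired)
```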

DNS security

DNS has several vulnerabilities that have been discovered over time. DNS cache poisoning is one of them. In DNS cache poisoning, an attacker sends forged responses to caching resolvers while posing as an authoritative name server. The resolver stores the false information for as long as the forged record's time to live (TTL) allows, so users' requests can be redirected to a malicious host. A related technique relies on deceptive domain names: an individual with malicious intent can create a dangerous website with a misleading name and try to convince users that it is real, giving the attacker access to their information. By replacing a character in a domain name with a similar-looking one, such as the number 1 in place of the letter l, an attacker can fool a user into selecting a false link. This is commonly exploited in phishing attacks.
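Look-alike domains can be screened by mapping visually confusable characters to a common "skeleton" and comparing the result against domains you trust, an idea similar to the confusable detection described in Unicode Technical Standard #39. The sketch below is deliberately minimal: the confusables table and the trusted-domain list are hypothetical examples, not a complete defense.

```python
from typing import Optional

# Hypothetical, deliberately tiny confusables table; real lists are far larger.
CONFUSABLES = str.maketrans({
    "1": "l",        # digit one looks like lowercase L
    "0": "o",        # digit zero looks like lowercase O
    "\u0430": "a",   # Cyrillic small a looks like Latin a
    "\u043e": "o",   # Cyrillic small o looks like Latin o
})

TRUSTED_DOMAINS = {"paypal.com", "google.com"}   # example allow-list


def skeleton(domain: str) -> str:
    """Map look-alike characters to a canonical form so similar names compare equal."""
    return domain.lower().translate(CONFUSABLES)


def impersonated_domain(domain: str) -> Optional[str]:
    """Return the trusted domain this name imitates, or None if it imitates none."""
    for trusted in TRUSTED_DOMAINS:
        if domain.lower() != trusted and skeleton(domain) == skeleton(trusted):
            return trusted
    return None


for candidate in ["paypa1.com", "g00gle.com", "g\u043e\u043egle.com", "google.com", "example.com"]:
    print(f"{candidate!r}: {impersonated_domain(candidate)}")
```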

Client-Server Architecture:

Client-server architecture is a computing model that divides the functionality of a software application into two major components: clients and servers. These components communicate over a network to perform tasks and share resources. This architectural model is widely used in various computing systems, from simple web applications to complex enterprise-level systems.

Key Components:

- Client:

- A client is a device or application that requests services or resources from a server. Clients initiate communication by sending requests to servers and await responses. Clients can be desktop computers, laptops, mobile devices, or software applications.

- Server:

- A server is a powerful computer or software application responsible for managing and providing services or resources to clients. Servers respond to client requests, process data, and manage shared resources. Examples include web servers, database servers, and file servers.

Characteristics and Features:

- Communication:

- Clients and servers communicate over a network using protocols such as HTTP (Hypertext Transfer Protocol), TCP/IP (Transmission Control Protocol/Internet Protocol), or other application-specific protocols.

- Request-Response Model:

- The interaction between clients and servers follows a request-response model. Clients send requests for specific services or resources, and servers respond with the requested information or perform the requested actions.

- Centralized Processing:

- Servers handle centralized processing tasks, managing data, computations, and business logic. Clients are responsible for presenting information to users and handling user interactions.

- Scalability:

- The client-server architecture is scalable, allowing organizations to scale their computing resources by adding or upgrading servers to meet increased demand. This scalability is crucial for handling growing user bases and workloads.

- Resource Sharing:

- Servers centralize resources such as databases, files, or application logic. This centralized approach facilitates efficient resource management and ensures data consistency across multiple clients.

- Security:

- Security measures can be implemented on both the client and server sides. Servers often have robust security measures to protect data and resources, and clients may include security features to secure user interactions and local data.

- Independence:

- Clients and servers operate independently, allowing for flexibility and modular design. Clients can be developed for various platforms, and server implementations can be changed or upgraded without affecting clients as long as the communication protocols remain consistent.

Examples of Client-Server Systems:

- Web Applications:

- Websites and web applications typically follow a client-server architecture. The web browser acts as the client, making requests to a web server that delivers web pages and content.

- Database Management Systems (DBMS):

- Database servers provide data storage and retrieval services to client applications. Clients, such as desktop applications or web servers, interact with the database server to perform database operations.

- Email Systems:

- Email clients (e.g., Outlook, Thunderbird) interact with email servers (e.g., Exchange, IMAP/SMTP servers) to send, receive, and store email messages.

- File Sharing Systems:

- File servers manage shared files and folders, serving them to clients that need access. Clients, in turn, request files or upload data to the file server.

- Network Printing:

- Print servers manage print jobs and deliver them to networked printers. Clients send print requests to the print server.
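The request-response pattern shared by all of these systems can be shown with a minimal TCP client and server built on Python's standard `socket` module. In this sketch, the "service" (upper-casing text) and the single-request server are illustrative assumptions. Real servers loop to handle many clients, often concurrently, and keep reading until a complete message has arrived rather than relying on a single `recv` call.

```python
import socket
import threading


def handle(request: bytes) -> bytes:
    """Server-side logic: here, simply return the request text in upper case."""
    return request.upper()


def serve_once(server_sock: socket.socket) -> None:
    """Accept one client, read its request, send back a response, then close."""
    conn, _addr = server_sock.accept()
    with conn:
        request = conn.recv(1024)
        conn.sendall(handle(request))


def client_request(port: int, message: bytes) -> bytes:
    """Client side: connect, send a request, and wait for the response."""
    with socket.create_connection(("127.0.0.1", port)) as sock:
        sock.sendall(message)
        return sock.recv(1024)


if __name__ == "__main__":
    # Port 0 asks the operating system to choose any free port.
    with socket.create_server(("127.0.0.1", 0)) as server:
        port = server.getsockname()[1]
        threading.Thread(target=serve_once, args=(server,), daemon=True).start()
        print(client_request(port, b"hello server"))   # b'HELLO SERVER'
```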

Hyper Text Transfer Protocol (HTTP):

HTTP, or Hypertext Transfer Protocol, is the foundation of data communication on the World Wide Web. It is an application layer protocol used for transmitting and receiving information across the internet. HTTP facilitates the communication between web clients (such as web browsers) and servers hosting web content. The protocol defines how messages are formatted and transmitted and how web servers and browsers should respond to various commands.

Key Characteristics of HTTP:

- Stateless Protocol:

- HTTP is a stateless protocol, meaning each request from a client to a server is treated independently. The server does not retain information about the previous requests, ensuring simplicity and scalability.

- Request-Response Model:

- Communication in HTTP follows a request-response model. A client (typically a web browser) sends an HTTP request to a server, requesting a specific action or resource. The server then responds to the request with the necessary data or an acknowledgment.

- Uniform Resource Identifier (URI):

- HTTP uses Uniform Resource Identifiers (URIs) to identify resources on the web. URIs include URLs (Uniform Resource Locators) and URNs (Uniform Resource Names).

- Methods (Verbs):

- HTTP defines various methods (also known as verbs) that indicate the action the client wants the server to perform. Common methods include:

- GET: Retrieve a resource.

- POST: Submit data to be processed.

- PUT: Create or replace a resource at the given URI.

- DELETE: Remove a resource.

- Headers:

- HTTP messages contain headers that provide additional information about the request or response. Headers include details such as content type, content length, date, and more.

- Status Codes:

- HTTP responses include status codes that indicate the outcome of the request. Common status codes include 200 (OK), 404 (Not Found), 500 (Internal Server Error), etc.

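- Connection Handling:

- In HTTP/1.0, each request typically used a separate TCP connection that was closed once the response was delivered. HTTP/1.1 keeps connections open by default (persistent connections) so that several requests can reuse them, and HTTP/2 multiplexes many requests over a single connection. Regardless of how connections are managed, each request is still processed as an independent transaction, which simplifies implementation and enhances scalability.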

- Text-based Protocol:

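- HTTP/1.x is a text-based protocol, so its messages are human-readable. This characteristic facilitates debugging and understanding of the communication between clients and servers. HTTP/2 and HTTP/3 use a binary framing layer for efficiency but keep the same methods, headers, and status codes.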
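Because HTTP/1.x messages are plain text, both a request and a response can be written out and taken apart by hand. The sketch below composes a minimal GET request and parses a hand-written sample response into its status code, headers, and body. The function names and the sample response are our own; a real client would also need to handle chunked transfer encoding, repeated headers, and malformed input.

```python
def build_get_request(host: str, path: str = "/") -> str:
    """Compose a minimal HTTP/1.1 GET request; lines end with CRLF, headers end with a blank line."""
    return f"GET {path} HTTP/1.1\r\nHost: {host}\r\nConnection: close\r\n\r\n"


def parse_response(raw: str) -> tuple[int, dict[str, str], str]:
    """Split a raw HTTP/1.x response into status code, headers, and body."""
    head, _, body = raw.partition("\r\n\r\n")          # a blank line separates headers from body
    status_line, *header_lines = head.split("\r\n")
    _version, status_code, _reason = status_line.split(" ", 2)
    headers = {}
    for line in header_lines:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()  # header names are case-insensitive
    return int(status_code), headers, body


print(build_get_request("example.com", "/index.html"), end="")

sample = (
    "HTTP/1.1 404 Not Found\r\n"
    "Content-Type: text/html\r\n"
    "Content-Length: 9\r\n"
    "\r\n"
    "Not Found"
)
status, headers, body = parse_response(sample)
print(status, headers["content-type"], body)   # 404 text/html Not Found
```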

Secure Version – HTTPS:

For secure transactions, the Hypertext Transfer Protocol Secure (HTTPS) is used. HTTPS is an extension of HTTP with added security measures provided by SSL/TLS encryption. This ensures that data exchanged between the client and server remains confidential and secure, preventing unauthorized access or tampering.

How HTTP Works:

- Request:

- A client sends an HTTP request to a server, specifying the method (GET, POST, etc.) and the URI of the resource it wants.

- Processing:

- The server processes the request, performs the required action, and generates an HTTP response.

- Response:

- The server sends the HTTP response back to the client, which contains the requested information or indicates the status of the operation.

- Rendering:

- The client (web browser) renders the received content or performs the necessary actions based on the response.

Electronic Mail (Email):

Electronic mail, commonly known as email, is a method of exchanging digital messages between people using electronic devices such as computers, smartphones, and tablets. Email has become a ubiquitous and essential communication tool in both personal and professional settings.

Key Components of Email:

- Email Address:

- An email address uniquely identifies a user and is composed of a local part (username) followed by the “@” symbol and the domain name. For example, “username@example.com.”

- Mail Server:

- Email is stored, sent, and received through email servers. Incoming emails are stored on the recipient’s mail server until retrieved by the recipient.

- Mail Client:

- A mail client, also known as an email client or email application, is software used to access and manage email. Examples include Outlook, Thunderbird, Apple Mail, and various web-based email clients.

- Protocols:

- Email communication relies on standardized protocols: SMTP (Simple Mail Transfer Protocol) for sending emails and relaying them between servers, IMAP (Internet Message Access Protocol) for reading emails while keeping them on the mail server, and POP3 (Post Office Protocol version 3), an older protocol that typically downloads emails to a single device.
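The structure of an email address described above can be checked with a short Python sketch. It uses the standard library's email.utils.parseaddr to drop an optional display name, then splits on the last "@". This is a simplified illustration, not full validation against the email standards, and the addresses are hypothetical.

```python
from email.utils import parseaddr


def split_address(address: str) -> tuple[str, str]:
    """Split an address into (local part, domain); simplified, not full validation."""
    # parseaddr removes an optional display name:
    # "Ann <ann@example.com>" -> ("Ann", "ann@example.com")
    _display_name, addr = parseaddr(address)
    # rpartition splits on the last "@", so the domain never contains "@".
    local, at, domain = addr.rpartition("@")
    if not at or not local or not domain:
        raise ValueError(f"not an email address: {address!r}")
    # Domain names are case-insensitive; the local part is left unchanged
    # because the receiving mail server decides how to interpret it.
    return local, domain.lower()


print(split_address("username@example.com"))
print(split_address("Ann <ann@Example.COM>"))
```

The domain part is what the sending server uses to locate the recipient's mail server; the local part only matters once the message reaches that server.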

Basic Process of Email Communication:

- Compose:

- The sender creates a new email message using their email client, specifying the recipient’s email address, subject, and message content.

- Send:

- The sender clicks the “send” button, and the email client uses the SMTP protocol to send the message to the sender’s outgoing mail server.

- Routing:

- The sender’s mail server uses DNS (Domain Name System) to look up the MX (mail exchanger) records of the recipient’s domain, which identify the recipient’s mail server, and then relays the email to that server over SMTP.

- Delivery:

- The recipient’s mail server receives the email and stores it until the recipient retrieves it using their email client.

- Retrieve:

- The recipient uses their email client, configured with either IMAP or POP3, to retrieve and view the email from their mail server.

- Read and Reply:

- The recipient reads the email, and if needed, replies or takes other actions. The response follows a similar process, creating a continuous communication loop.

Email Features and Functions:

- Attachments:

- Users can attach files, documents, images, or other media to their emails, facilitating the sharing of information.

- Folders and Labels:

- Email clients often provide organizational features such as folders or labels to help users categorize and manage their emails.

- Search Functionality:

- Robust search features allow users to quickly find specific emails among their archives.

- Forwarding and CC/BCC:

- Users can forward emails to others, and the CC (Carbon Copy) and BCC (Blind Carbon Copy) features enable sending copies to multiple recipients.

- Spam Filtering:

- Email services often include spam filters to automatically detect and filter out unwanted or malicious emails.

- Signatures:

- Users can set up email signatures, providing a personalized closing statement with contact information.

- Encryption and Security:

- Security measures such as email encryption enhance the privacy and confidentiality of email communications.
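The difference between CC and BCC shows up in how the message headers are handled. In this Python sketch with hypothetical addresses, every To, Cc, and Bcc address becomes a delivery recipient, but the Bcc header is removed before sending, so other recipients never see those addresses. Python's smtplib.SMTP.send_message performs this Bcc removal automatically; the explicit del here only makes the step visible.

```python
from email.message import EmailMessage
from email.utils import getaddresses


def envelope_recipients(msg: EmailMessage) -> list[str]:
    """Every address in To, Cc, and Bcc receives a copy of the message."""
    fields = []
    for header in ("To", "Cc", "Bcc"):
        fields.extend(str(value) for value in msg.get_all(header, []))
    return [addr for _name, addr in getaddresses(fields)]


msg = EmailMessage()
msg["From"] = "alice@example.com"
msg["To"] = "bob@example.com"
msg["Cc"] = "carol@example.com, dave@example.com"
msg["Bcc"] = "eve@example.com"
msg.set_content("The quarterly report is attached.")

recipients = envelope_recipients(msg)  # addresses the server will deliver to
del msg["Bcc"]                         # Bcc recipients stay hidden from everyone else
print(recipients)
print(msg.as_string())
```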

Business and Professional Use:

Email is extensively used in business and professional settings for communication, collaboration, and information sharing. It serves as a formal means of communication for various purposes, including:

- Internal Communication: Within organizations for team collaboration and announcements.

- External Communication: With clients, customers, and business partners.

- Document Sharing: Sending attachments for proposals, reports, and other business-related documents.

- Scheduling: Coordinating meetings and appointments through email.

Challenges:

While email is a powerful communication tool, it also presents challenges such as email overload, spam, phishing, and the need for effective management strategies to maintain inbox organization and security.

File Transfer Protocol (FTP):

File Transfer Protocol (FTP) is a standard network protocol used to transfer files between a client and a server on a computer network. FTP operates on a client-server model where the client initiates a connection to the server to perform file transfer operations.

Key Components and Features:

- Client and Server:

- Client: The user’s device or software application that initiates the FTP connection.

- Server: The computer or server hosting the FTP service, storing files that clients can access and transfer.

- Control Connection and Data Connection:

- FTP establishes two separate connections during file transfers:

- Control Connection: Handles commands and responses between the client and server. It carries commands for sending login credentials, changing directories, and initiating file transfers.

- Data Connection: Actual data transfer occurs over a separate connection. The data connection can be established in two modes: active mode (the server initiates the data connection) or passive mode (the client initiates the data connection).

- FTP Commands:

- FTP uses a set of commands to perform various operations. Common commands include:

- USER/PASS: Authenticate the user with a username and password.

- CWD (Change Working Directory): Change the current directory on the server.

- LIST/NLST: List files and directories in the current directory.

- RETR (Retrieve): Download a file from the server.

- STOR (Store): Upload a file to the server.

- DELE (Delete): Delete a file on the server.

- Anonymous FTP:

- Some FTP servers allow anonymous access, where users can log in using the username “anonymous” and provide an email address as the password. This allows users to access public files without a dedicated account.

- FTP Modes:

- FTP supports two modes for data transfer:

- ASCII Mode: Used for transferring text files, ensuring proper line endings based on the operating system.

- Binary Mode: Used for transferring binary files without modification.

- FTP Over Secure Connections:

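- FTP can be secured with FTPS (FTP Secure), which adds TLS encryption to standard FTP. SFTP (SSH File Transfer Protocol) is a separate protocol that runs over SSH rather than an extension of FTP, but it serves the same purpose. Both provide encryption and authentication for secure file transfers.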

- Firewall Considerations:

- FTP may encounter issues with firewalls due to its use of separate control and data connections. Passive mode is often used to address firewall challenges.
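Passive mode works by having the server tell the client where to open the data connection. Per the FTP specification (RFC 959), the server answers the PASV command with a 227 reply containing six numbers: four for the IP address and two for the port, where port = p1 * 256 + p2. The Python sketch below parses such a reply; the sample reply text is illustrative.

```python
import re

# Matches "(h1,h2,h3,h4,p1,p2)" inside a 227 reply.
PASV_PATTERN = re.compile(r"\((\d+),(\d+),(\d+),(\d+),(\d+),(\d+)\)")


def parse_pasv_reply(reply: str) -> tuple[str, int]:
    """Return the (host, port) the client should connect to for the data connection."""
    match = PASV_PATTERN.search(reply)
    if not reply.startswith("227") or match is None:
        raise ValueError(f"not a PASV reply: {reply!r}")
    h1, h2, h3, h4, p1, p2 = (int(part) for part in match.groups())
    # The port is sent as two bytes: high byte p1, low byte p2.
    return f"{h1}.{h2}.{h3}.{h4}", p1 * 256 + p2


host, port = parse_pasv_reply("227 Entering Passive Mode (192,168,1,2,19,137)")
print(host, port)  # 192.168.1.2 5001
```

Because the client opens this second connection outward, it usually passes through client-side firewalls, which is why passive mode helps. In active mode the roles are reversed: the client sends a PORT command with the same six-number format and the server connects back to it.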

FTP Workflow:

- Connection Establishment:

- The client establishes a control connection to the FTP server on the default port 21.

- Authentication:

- The client provides authentication credentials (username and password) to log in to the server.

- Command Execution:

- The client sends FTP commands over the control connection to perform various operations like navigating directories or initiating file transfers.

- Data Transfer:

- For file transfers, a separate data connection is established in either active or passive mode, depending on the configuration.

- Response Handling:

- The server responds to client commands and provides status codes to indicate the success or failure of operations.

- Connection Termination:

- Once the operation is complete, the connections are closed: the data connection closes at the end of each transfer, and the control connection closes when the client sends the QUIT command.
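The workflow above maps closely onto Python's standard ftplib module. The following sketch, assuming a reachable server and a hypothetical host, directory, and file name, walks through connection, authentication, command execution, data transfer, response handling, and termination. It is not executed here because it needs a live FTP server.

```python
from ftplib import FTP


def fetch_file(host: str, remote_dir: str, filename: str, local_path: str,
               user: str = "anonymous", password: str = "") -> list[str]:
    """Download one file and return the directory listing (sketch)."""
    with FTP() as ftp:                  # 6. leaving the block sends QUIT
        ftp.connect(host, 21)           # 1. control connection on port 21
        ftp.login(user, password)       # 2. USER/PASS; ftplib supplies a default
                                        #    password for the "anonymous" user
        ftp.set_pasv(True)              # passive mode (already ftplib's default)
        ftp.cwd(remote_dir)             # 3. CWD command
        listing = ftp.nlst()            # 4. NLST output arrives on a data connection
        with open(local_path, "wb") as out:
            # RETR in binary mode (ftplib sends TYPE I first); each chunk
            # received on the data connection is written to the local file.
            ftp.retrbinary(f"RETR {filename}", out.write)
        # 5. error replies raise ftplib.error_temp (4xx) or ftplib.error_perm (5xx)
    return listing


# Example call against a hypothetical server:
# fetch_file("ftp.example.com", "/pub", "readme.txt", "readme.txt")
```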

Uses of FTP:

- Website Maintenance:

- FTP is often used to upload and update files on web servers, allowing website administrators to manage content.

- File Sharing:

- FTP facilitates the sharing of files between users on a network or over the internet.

- Software Distribution:

- Software developers use FTP to distribute software packages and updates.

- Backup and Archiving:

- FTP can be used for transferring files for backup purposes or archiving data.

- Remote File Management:

- Administrators use FTP to remotely manage files on servers, including configuration files and log files.

World Wide Web (WWW):

The World Wide Web, also known as the Web, is a collection of websites or web pages stored on web servers and accessed from users’ computers through the internet. These websites contain text pages, digital images, audio, videos, and other content. Users can access this content from anywhere in the world over the internet using devices such as computers, laptops, and mobile phones. Together with the internet, the WWW enables the retrieval and display of text and media on your device.

The building blocks of the Web are web pages, which are formatted in HTML, connected by links called “hypertext” or hyperlinks, and accessed using HTTP. These links are electronic connections between related pieces of information, so users can reach the information they want quickly. Hypertext lets a user select a word or phrase in the text to open other pages that provide additional information related to that word or phrase.

Each web page has an online address called a Uniform Resource Locator (URL). A collection of related web pages published under a common domain name is called a website, e.g., www.facebook.com, www.google.com, etc. The World Wide Web is therefore like a huge electronic book whose pages are stored on many servers across the world.

How the World Wide Web Works:

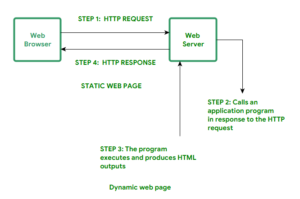

We have seen that the WWW is a collection of websites connected to the internet so that people can search for and share information. Now let us understand how it works. The Web follows the internet’s basic client-server model, as shown in the image above. Servers store web pages and transfer them to users’ computers on the network when users request them.

A web server is a software program that serves the web pages requested by users through a browser. The computer of a user who requests documents from a server is known as a client, and the browser installed on that computer lets the user view the retrieved documents. All websites are stored on web servers. Just as a tenant rents space in a house, a website occupies space on a server, and the website owner pays a hosting fee for it. The server keeps the website available and delivers its web pages whenever a user requests them.

The WWW comes into play the moment you open a browser and type a URL in the address bar or search for something on Google. Three main technologies are involved in transferring information (web pages) from servers to clients (users’ computers): Hypertext Markup Language (HTML), Hypertext Transfer Protocol (HTTP), and web browsers.

Key Concepts and Components:

- Hypertext and Hyperlinks:

- Hypertext is text that contains links to other text. Hyperlinks, or simply links, are clickable elements that connect one document or webpage to another. This interconnected structure allows users to navigate between different resources on the web.

- Webpages:

- A webpage is a document or resource that is part of the World Wide Web and is typically written in HTML (Hypertext Markup Language). Webpages can contain various media elements, including text, images, videos, and interactive features.

- URL (Uniform Resource Locator):

- A URL is a web address that specifies the location of a resource on the web. It consists of a protocol (e.g., http or https), domain name, and path to the resource. For example, “https://www.example.com/homepage.”

- Web Browsers:

- Web browsers are software applications that allow users to access and view webpages. Examples include Google Chrome, Mozilla Firefox, Microsoft Edge, and Safari. Browsers interpret HTML and other web technologies to render content.

- Web Servers:

- Web servers store and serve webpages to users upon request. They respond to requests from web browsers, delivering the requested content over the internet. Apache, Nginx, and Microsoft Internet Information Services (IIS) are common web server software.

- Web Standards:

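- Web standards, such as HTML, CSS (Cascading Style Sheets), and JavaScript, ensure consistency and interoperability across different web browsers. These standards are maintained by organizations such as the World Wide Web Consortium (W3C), the WHATWG (which maintains the HTML Living Standard), and Ecma International (which standardizes JavaScript as ECMAScript).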

- Search Engines:

- Search engines (e.g., Google, Bing) index and organize content on the web, making it easier for users to find information. Users can enter keywords, and the search engine returns relevant webpages.

- Web Development Technologies:

- Technologies like AJAX (Asynchronous JavaScript and XML), PHP (Hypertext Preprocessor), and others enable the creation of dynamic and interactive webpages. Content management systems (CMS) like WordPress simplify website development and management.
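The parts of a URL described above can be pulled apart with Python's standard urllib.parse module. This sketch reuses the example.com address; the query string, the fragment, and the default-port table are additions for illustration.

```python
from urllib.parse import parse_qs, urlsplit

DEFAULT_PORTS = {"http": 80, "https": 443}  # well-known ports for web protocols


def describe_url(url: str) -> dict:
    """Break a URL into the parts a browser uses to fetch the resource."""
    parts = urlsplit(url)
    return {
        "protocol": parts.scheme,                              # http or https
        "host": parts.hostname,                                # resolved to an IP address via DNS
        "port": parts.port or DEFAULT_PORTS.get(parts.scheme),
        "path": parts.path or "/",                             # resource on the server
        "query": parse_qs(parts.query),                        # e.g. ?lang=en
        "fragment": parts.fragment,                            # section of the page; not sent to the server
    }


info = describe_url("https://www.example.com/homepage?lang=en#news")
print(info)
```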

Remote Login (TELNET):

TELNET, short for “TELetype NETwork,” is a network protocol that allows users to access and manage remote systems over a network, typically the internet. TELNET enables a user to log in to a remote computer, execute commands, and interact with its resources as if they were physically present at the remote location. The protocol is text-based and predates more secure alternatives like SSH (Secure Shell).

Key Features of TELNET:

- Text-Based Communication:

- TELNET is a text-based protocol, meaning that all communication between the local and remote systems occurs through plain text characters. This includes the user’s commands and the responses from the remote system.

- Port 23:

- TELNET typically operates on port 23. When connecting to a remote system via TELNET, the user’s TELNET client establishes a connection to the TELNET server on the remote system using this port.

- Interactive Sessions:

- TELNET provides an interactive session between the local and remote systems. Users can type commands on their local system, and these commands are sent to the remote system for execution. The results or responses are then sent back to the local system for display.

- Authentication:

- TELNET supports simple authentication mechanisms, often relying on a username and password for access to the remote system. However, this authentication process is not encrypted, making it vulnerable to security risks such as password sniffing.
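Because TELNET is text-based, whatever crosses the connection, including a typed password, is readable by anyone who captures the traffic. The only non-text bytes are control commands, which begin with the IAC ("Interpret As Command") byte, 255, as defined in the TELNET specification (RFC 854). This simplified Python sketch separates the readable text from those commands in a captured byte stream. The sample capture is hypothetical, and the parser skips some edge cases, such as escaped bytes inside subnegotiation.

```python
# TELNET command bytes from RFC 854.
IAC, DONT, DO, WONT, WILL, SB, SE = 255, 254, 253, 252, 251, 250, 240


def split_telnet_stream(data: bytes) -> tuple[bytes, list[tuple[int, int]]]:
    """Return (plain text, [(command, option), ...]) from a captured stream."""
    text = bytearray()
    negotiations = []
    i = 0
    while i < len(data):
        if data[i] != IAC:              # ordinary data byte: plain text
            text.append(data[i])
            i += 1
            continue
        if i + 1 >= len(data):          # stream ends mid-command
            break
        command = data[i + 1]
        if command == IAC:              # IAC IAC encodes a literal 255 data byte
            text.append(IAC)
            i += 2
        elif command in (DO, DONT, WILL, WONT):
            if i + 2 >= len(data):
                break
            negotiations.append((command, data[i + 2]))  # e.g. WILL ECHO
            i += 3
        elif command == SB:             # subnegotiation: skip to IAC SE
            end = data.find(bytes([IAC, SE]), i + 2)
            if end == -1:
                break
            i = end + 2
        else:                           # other two-byte commands (NOP, GA, ...)
            i += 2
    return bytes(text), negotiations


capture = (bytes([IAC, WILL, 1])        # server offers to echo (option 1 = ECHO)
           + b"login: alice\r\n"
           + bytes([IAC, DO, 24])       # server asks for terminal type (option 24)
           + b"Password: secret123\r\n")
text, negotiations = split_telnet_stream(capture)
print(text.decode("ascii"))             # the password is visible in plain text
print(negotiations)
```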

TELNET Workflow:

- Connection Establishment:

- The user initiates a TELNET connection by specifying the TELNET command followed by the IP address or domain name of the remote system. For example:

telnet remote.example.com

or

telnet 192.168.1.1

- Authentication:

- The user provides their login credentials (username and password) to authenticate with the remote system.

- Interactive Session:

- Once authenticated, the user enters commands on their local system. These commands are transmitted to the remote system over the TELNET connection. The remote system executes the commands and sends the results back to the local system.

- Connection Termination:

- The user can terminate the TELNET session by entering the appropriate command or simply closing the TELNET client. This closes the connection to the remote system.

Security Concerns:

TELNET has several security vulnerabilities, primarily because it transmits data, including login credentials, in plain text. This lack of encryption exposes sensitive information to potential interception by malicious entities on the network. As a result, TELNET is considered insecure for use over untrusted networks, and its usage has significantly diminished in favor of more secure alternatives like SSH.

SSH as a Secure Alternative:

Secure Shell (SSH) has largely replaced TELNET for remote access due to its enhanced security features. Unlike TELNET, SSH encrypts the entire communication session, providing confidentiality and protecting against various security threats. SSH operates on port 22 by default and supports secure remote login, file transfer, and other secure network services.

Static Web pages: Static web pages are very simple. They are written in languages such as HTML, JavaScript, and CSS. When a server receives a request for a static web page, it sends the stored page to the client without any additional processing, and the page is displayed in the web browser. A static page remains the same until someone changes it manually.

Characteristics:

- Static pages do not change based on user input or interactions.

- Every user sees the same content.

- Content updates require manual editing of HTML code by the web developer.

- Commonly used for simple websites with consistent information.

- Advantages:

- Simplicity: Static pages are straightforward and easy to create.

- Performance: Static pages load quickly since the content is already defined.

- Disadvantages:

- Lack of Interactivity: Limited interaction with users since content is fixed.

- Maintenance Challenges: Regular content updates require manual editing of HTML.

Dynamic Web Pages: Dynamic web pages are generated using server-side technologies such as CGI scripts, ASP, and ASP.NET, often combined with client-side techniques such as AJAX. The content of a dynamic page can differ from one visitor to another, and such pages can take more time to load than static web pages. Dynamic web pages are used where information changes frequently, for example, stock prices and weather information.

Characteristics:

- Content can change based on user input, preferences, or other factors.

- Interactivity: Users can interact with dynamic elements, such as forms, that send data to the server.

- Often powered by server-side scripting languages like PHP, Python, or Node.js.

- Advantages:

- Interactivity: Dynamic pages can respond to user actions and provide personalized content.

- Easy Content Management: Content can be updated dynamically without modifying the underlying code.

- Scalability: Well-suited for websites with frequently changing content or large amounts of data.

- Disadvantages:

- Complexity: Dynamic pages require server-side scripting, which can be more complex than static HTML.

- Slower Loading: Dynamic pages may take longer to load as content is generated in real-time.

- Server Resources: Dynamic pages may place a higher demand on server resources, especially for complex interactions.

Examples:

- Static Web Page Example:

- A personal portfolio website with fixed information about the individual’s skills, projects, and contact details.

- Dynamic Web Page Example:

- An e-commerce website that displays product recommendations based on a user’s browsing history or preferences. The content is generated dynamically from a database.
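The difference between the two examples can be sketched in a few lines of Python. The static handler returns the same stored HTML to every visitor, while the dynamic handler builds HTML per request from data standing in for a database. The catalog, page contents, and function names are hypothetical; a real site would use a web framework and a database rather than in-memory dictionaries.

```python
import html

# Static page: stored once, identical for every visitor.
PORTFOLIO_PAGE = "<html><body><h1>Portfolio</h1><p>Skills: Python, SQL</p></body></html>"

# Hypothetical product catalog standing in for an e-commerce database.
CATALOG = {
    "books": ["Networking Basics", "Intro to HTTP"],
    "laptops": ["Ultrabook 13", "Workstation 15"],
}


def serve_static(_username: str) -> str:
    """Static page: the server returns the stored page without processing."""
    return PORTFOLIO_PAGE


def serve_dynamic(username: str, browsing_history: list[str]) -> str:
    """Dynamic page: HTML is generated per request from user data."""
    recommendations = []
    for category in browsing_history:
        for product in CATALOG.get(category, []):
            if product not in recommendations:
                recommendations.append(product)
    # Escape user-controlled values so they cannot inject HTML into the page.
    items = "".join(f"<li>{html.escape(p)}</li>" for p in recommendations[:3])
    return (f"<html><body><h1>Welcome back, {html.escape(username)}</h1>"
            f"<ul>{items}</ul></body></html>")


print(serve_static("ann") == serve_static("raj"))                            # True
print(serve_dynamic("ann", ["books"]) == serve_dynamic("raj", ["laptops"]))  # False
```

The extra work in serve_dynamic (looking up data and assembling HTML on every request) is the source of the slower loading and higher server load listed among the disadvantages of dynamic pages.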

Search Engines:

Search engines are specialized software systems designed to retrieve information from the vast and diverse content available on the World Wide Web. These engines help users find relevant information, web pages, images, videos, and other resources based on their queries. Search engines play a crucial role in making the internet accessible and navigable, enabling users to discover and access content efficiently.

Key Components and Functions of Search Engines:

- Crawling:

- Search engines use automated programs known as spiders or bots to crawl the web. These bots systematically visit web pages, follow links, and index the content they find.

- Indexing:

- The crawled information is organized and stored in a massive database known as an index. This index allows search engines to retrieve relevant results quickly when users enter search queries.

- Ranking Algorithm:

- Search engines employ complex algorithms to determine the relevance and importance of web pages based on the search query. Factors such as keywords, content quality, user engagement, and external links contribute to the ranking of pages.

- User Query Processing:

- When a user enters a search query, the search engine processes the query through its algorithm and retrieves the most relevant results from its index.

- Results Display:

- Search engine results pages (SERPs) display a list of web pages deemed most relevant to the user’s query. Results may include web pages, images, videos, news articles, and other types of content.

- Page Ranking:

- Pages are often ranked in order of relevance, with the most relevant appearing at the top. Users typically focus on the top results, and search engines aim to provide the most valuable and accurate information first.
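The crawl, index, and rank steps above can be illustrated with a toy Python sketch over a hypothetical three-page "web" held in a dictionary. Crawling is a breadth-first walk over links, indexing builds an inverted index (term to pages), and ranking here is plain term frequency, a deliberately simple stand-in for the many signals real search engines combine.

```python
import re
from collections import Counter, defaultdict, deque

# Hypothetical mini-web: URL -> (page text, outgoing links).
WEB = {
    "a.example/": ("Internet protocols such as HTTP and FTP", ["a.example/http", "b.example/"]),
    "a.example/http": ("HTTP is the protocol of the web; HTTP carries requests", ["a.example/"]),
    "b.example/": ("FTP transfers files", []),
}


def crawl(seed: str) -> list[str]:
    """Crawling: visit pages breadth-first, following links, each page once."""
    seen, queue, visited = {seed}, deque([seed]), []
    while queue:
        url = queue.popleft()
        visited.append(url)
        for link in WEB.get(url, ("", []))[1]:
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return visited


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(urls: list[str]) -> dict[str, dict[str, int]]:
    """Indexing: inverted index mapping each term to {url: occurrences}."""
    index: dict[str, dict[str, int]] = defaultdict(dict)
    for url in urls:
        page_text = WEB.get(url, ("", []))[0]
        for term, count in Counter(tokenize(page_text)).items():
            index[term][url] = count
    return index


def search(index: dict[str, dict[str, int]], query: str) -> list[str]:
    """Query processing and ranking: score pages by how often query terms occur."""
    scores: Counter = Counter()
    for term in tokenize(query):
        for url, count in index.get(term, {}).items():
            scores[url] += count
    return [url for url, _ in scores.most_common()]


index = build_index(crawl("a.example/"))
print(search(index, "http"))  # ['a.example/http', 'a.example/']
```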

Popular Search Engines:

- Google:

- Google is the most widely used search engine globally, known for its powerful algorithms, comprehensive index, and user-friendly interface.

- Bing:

- Microsoft’s Bing search engine provides an alternative to Google, offering its own set of features and search algorithms.

- Yahoo:

- Yahoo, once a prominent search engine, now relies on Bing’s search technology for its search results.

- Baidu:

- Baidu is a leading search engine in China, providing results in Chinese and prioritizing content relevant to the Chinese-speaking audience.

- Yandex:

- Yandex is a popular search engine in Russia and other Russian-speaking countries, offering search services in Russian.

Search Engine Optimization (SEO):

Search engine optimization is the practice of optimizing web pages to improve their visibility and ranking in search engine results. SEO involves techniques such as keyword optimization, creating high-quality content, improving website structure, and obtaining relevant backlinks. The goal is to enhance a website’s chances of appearing in the top results for relevant queries.

Challenges and Ethical Considerations:

- Spam and Manipulation:

- Some individuals or organizations may attempt to manipulate search engine rankings through unethical practices, such as keyword stuffing, link farms, and cloaking.

- Privacy Concerns:

- Search engines collect and store vast amounts of user data, raising privacy concerns. Users’ search histories and personal information are valuable assets for targeted advertising.

- Algorithm Changes:

- Search engines regularly update their algorithms to provide more accurate and relevant results. However, these changes can impact website rankings and visibility, leading to challenges for website owners and SEO practitioners.

- Information Accuracy:

- Search engines aim to deliver accurate information, but misinformation or biased content can sometimes appear in search results. Efforts are ongoing to address these issues and improve content quality.

E-Commerce (Electronic Commerce):

E-commerce refers to the buying and selling of goods and services over the internet or other electronic systems. It has become a significant aspect of modern business, revolutionizing the way transactions are conducted and enabling businesses to reach a global audience. E-commerce encompasses various models, technologies, and strategies to facilitate online commerce.

Key Components and Models of E-Commerce:

- Online Retail (B2C – Business-to-Consumer):

- In the B2C model, businesses sell products or services directly to consumers. Examples include online retailers, marketplaces, and individual brand websites.

- Online Marketplace:

- Marketplaces bring together multiple sellers and buyers on a single platform. Examples include Amazon, eBay, and Etsy.

- Online Payments:

- E-commerce relies on secure online payment systems to facilitate transactions. This includes credit/debit card payments, digital wallets, and other electronic payment methods.

- Digital Products and Services:

- E-commerce extends beyond physical goods to include digital products (e-books, software, music) and services (online courses, consulting).

- Business-to-Business (B2B):

- In the B2B model, businesses conduct transactions with other businesses. This includes manufacturers, wholesalers, and suppliers interacting with retailers or other businesses in the supply chain.

- Mobile Commerce (M-Commerce):

- M-commerce involves conducting e-commerce activities through mobile devices. Mobile apps and optimized websites cater to users who shop or conduct transactions using smartphones and tablets.

- Social Commerce:

- Social media platforms play a role in e-commerce, allowing businesses to sell products directly through social channels. Social commerce integrates social interactions with the shopping experience.

- Dropshipping:

- Dropshipping is a retail fulfillment method where a store doesn’t keep the products it sells in stock. Instead, when a store sells a product, it purchases the item from a third party and has it shipped directly to the customer.

Key Features and Benefits of E-Commerce:

- Global Reach:

- E-commerce breaks down geographical barriers, enabling businesses to reach a global customer base.

- 24/7 Accessibility:

- Online stores are accessible 24/7, allowing customers to shop at their convenience regardless of time zones.

- Convenience:

- Customers can browse and purchase products or services from the comfort of their homes, eliminating the need to visit physical stores.

- Personalization:

- E-commerce platforms can leverage data to offer personalized recommendations and a tailored shopping experience.

- Cost Efficiency:

- E-commerce can reduce costs associated with maintaining physical stores, allowing businesses to offer competitive prices.

- Data Analytics:

- Businesses can gather and analyze customer data to understand preferences, track trends, and optimize marketing strategies.

- Customer Reviews and Ratings:

- E-commerce platforms often feature customer reviews and ratings, providing valuable insights for potential buyers.

- Inventory Management:

- E-commerce systems can automate inventory management, helping businesses track stock levels and manage supply chains efficiently.

E-Commerce Challenges:

- Security Concerns:

- E-commerce transactions involve sensitive financial information, making security a top concern. Implementing secure payment gateways and protecting customer data is crucial.

- Competition:

- Intense competition in the online marketplace requires businesses to differentiate themselves through quality products, efficient service, and innovative strategies.

- Logistics and Shipping:

- Timely and reliable delivery is essential for customer satisfaction. Managing logistics, shipping costs, and addressing delivery challenges are common concerns.

- Technological Evolution:

- E-commerce businesses need to adapt to evolving technologies, such as mobile platforms and emerging payment methods, to stay competitive.

- Customer Trust:

- Building and maintaining customer trust is critical. Addressing concerns related to online security, privacy, and return policies contributes to trustworthiness.

Future Trends in E-Commerce:

- Augmented Reality (AR) and Virtual Reality (VR):

- AR and VR technologies enhance the online shopping experience by allowing customers to visualize products in a virtual environment before making a purchase.

- Artificial Intelligence (AI) and Chatbots:

- AI-powered chatbots provide personalized assistance, answer customer queries, and enhance customer engagement.

- Voice Commerce:

- Voice-activated devices and virtual assistants facilitate voice-based shopping, allowing customers to place orders using voice commands.

- Sustainable E-Commerce:

- The emphasis on sustainability and eco-friendly practices is influencing consumer choices. Businesses adopting sustainable practices may gain a competitive edge.

- Subscription-Based Models:

- Subscription-based e-commerce models, where customers subscribe to receive products or services regularly, are gaining popularity.

- Social Commerce Integration:

- Social media platforms are increasingly integrated with e-commerce, allowing users to discover and purchase products directly through social channels.

E-Governance:

E-Governance, or electronic governance, refers to the use of information and communication technologies (ICTs), particularly the internet, to enhance and streamline government operations, improve service delivery, and foster citizen engagement. It involves the digital transformation of government processes and services to make them more accessible, efficient, and transparent. Here are key aspects of e-governance in the context of the internet and internet services:

1. Online Service Delivery:

a. Government Websites:

- Governments create official websites to provide information, services, and resources to citizens. These websites serve as centralized platforms for accessing various government services.

b. Service Portals:

- Service portals consolidate multiple government services into a single online platform, simplifying access for citizens. Users can apply for permits, pay taxes, and access a range of services through these portals.

c. E-Forms and Digital Transactions:

- E-governance encourages the use of electronic forms for applications and transactions. Citizens can fill out forms online, submit documents electronically, and make digital payments for government services.

2. Digital Identity and Authentication:

a. E-Government Authentication Systems:

- Secure authentication systems are implemented to ensure the identity of citizens accessing online government services. This may involve the use of digital signatures, two-factor authentication, or biometric authentication.

b. National ID and E-Government Portals:

- National identification systems are integrated with e-government portals, providing a single digital identity for citizens to access various services.

3. Open Data and Transparency:

a. Open Government Data:

- Governments publish data sets and information on open data platforms, fostering transparency and allowing citizens, businesses, and researchers to access and use public data.

b. Transparency Portals:

- Transparency portals provide real-time information on government spending, budget allocations, and other financial transactions, promoting accountability and openness.

4. Communication and Engagement:

a. Social Media Platforms:

- Governments use social media platforms to communicate with citizens, share updates, and gather feedback. Social media enhances public outreach and engagement.

b. Online Consultations and Surveys:

- Governments conduct online consultations and surveys to gather public opinions on policies, projects, and initiatives, involving citizens in decision-making processes.

5. E-Procurement:

a. Online Tendering and Bidding:

- E-governance facilitates online procurement processes, allowing businesses to participate in government tenders and bids through digital platforms.

b. Vendor Registration and Management:

- Vendors can register and manage their profiles online, simplifying the procurement process for both government agencies and suppliers.

6. Cybersecurity Measures:

a. Secure Data Handling:

- Governments implement robust cybersecurity measures to protect sensitive citizen data and ensure the secure handling of information in online transactions.

b. Data Privacy Regulations:

- Governments enact data protection laws and regulations to safeguard citizen privacy and establish guidelines for the responsible use of personal information.

7. Mobile Governance (M-Governance):

a. Mobile Apps for Services:

- Governments develop mobile applications to extend services to citizens on their mobile devices, providing access to information and services on the go.

b. SMS-Based Services:

- SMS-based services deliver information, alerts, and updates to citizens who may not have smartphones or internet access, ensuring inclusivity.

A Smart City

A Smart City leverages the capabilities of the Internet and internet services to enhance the quality of life for its residents, improve efficiency in urban operations, and address various challenges related to urbanization. The concept of a Smart City involves the integration of digital technologies, data-driven insights, and connectivity to create a sustainable and intelligent urban environment. Here are key aspects related to the role of the Internet and internet services in building and managing Smart Cities:

1. Internet of Things (IoT):

- Sensor Networks: Deploying IoT sensors throughout the city to collect real-time data on various parameters such as traffic flow, air quality, waste management, energy consumption, and more.

- Smart Devices: Integration of smart devices and systems for home automation, intelligent transportation, and public services.

2. Connectivity:

- High-Speed Internet: Ensuring widespread access to high-speed internet connectivity for residents and businesses.

- 5G Technology: Implementing 5G networks to support the increased data demands of IoT devices and enable faster and more reliable communication.

3. Data Analytics and Big Data:

- Data Collection: Gathering and analyzing vast amounts of data from sensors, devices, and systems to gain insights into urban operations and trends.

- Predictive Analytics: Using predictive analytics to anticipate and address issues such as traffic congestion, energy consumption, and public safety.
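The predictive-analytics idea above can be illustrated with a deliberately simple sketch. It assumes hypothetical vehicles-per-minute counts from a single road sensor and flags congestion when the moving average of the last few readings exceeds a threshold. The window size, the threshold, and the function name `congestion_alerts` are illustrative assumptions; real city platforms typically use far richer models.

```python
from collections import deque

# Illustrative assumptions, not values from any real deployment.
WINDOW = 3             # number of recent readings to average
CONGESTION_LIMIT = 40  # vehicles per minute treated as congested

def congestion_alerts(readings, window=WINDOW, limit=CONGESTION_LIMIT):
    """Return the indices of readings at which the moving average exceeds `limit`."""
    recent = deque(maxlen=window)  # keeps only the last `window` readings
    alerts = []
    for i, value in enumerate(readings):
        recent.append(value)
        # Only evaluate once the window is full.
        if len(recent) == window and sum(recent) / window > limit:
            alerts.append(i)
    return alerts

# Hypothetical sensor data: vehicles counted per minute at one junction.
counts = [20, 25, 30, 38, 45, 50, 35, 22]
print(congestion_alerts(counts))  # [5, 6]
```

A traffic-control system could use such alerts to adjust signal timing or reroute traffic before a queue builds. The moving average smooths out one-off spikes at the cost of reacting a few readings late.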

4. Smart Infrastructure:

- Smart Grids: Implementing intelligent energy distribution systems to optimize energy consumption and reduce wastage.

- Smart Buildings: Using IoT and automation in building management systems for energy efficiency, security, and comfort.

- Connected Transportation: Deploying smart traffic management systems, intelligent transportation networks, and real-time tracking for public transit.

5. E-Governance and Citizen Services:

- Digital Platforms: Offering online platforms and services for citizens to access government services, pay bills, and participate in civic activities.

- Open Data Initiatives: Making government data accessible to the public, fostering transparency and encouraging the development of innovative solutions.

6. Cybersecurity:

- Security Measures: Implementing robust cybersecurity measures to protect sensitive data, IoT devices, and critical infrastructure from cyber threats.

- Privacy Protection: Ensuring the privacy of citizens’ data through policies and technologies that safeguard personal information.

7. Smart Environment and Sustainability:

- Waste Management: Using sensors to optimize waste collection routes, reduce environmental impact, and promote recycling.

- Green Initiatives: Implementing technologies to monitor and reduce environmental pollution, promote green spaces, and enhance overall sustainability.

8. Digital Healthcare:

- Telemedicine: Leveraging digital technologies to provide remote healthcare services and improve access to medical resources.

- Health Monitoring: Using IoT devices to monitor health parameters in real time and support disease prevention.

9. Education and Innovation:

- Digital Learning: Integrating technology into education, providing digital learning platforms, and fostering innovation in schools and universities.

- Innovation Hubs: Creating spaces for innovation and entrepreneurship to thrive, attracting tech startups and fostering a culture of continuous improvement.

10. Community Engagement:

- Smart Citizen Participation: Encouraging citizen engagement through digital platforms, community forums, and crowdsourcing initiatives.

- Feedback Mechanisms: Establishing feedback mechanisms to allow residents to report issues, provide suggestions, and actively participate in the city’s development.

Censorship and Privacy Issues:

Censorship and privacy are complex, multifaceted challenges that arise wherever information is disseminated, communicated, or processed with technology. Both have significant implications for individuals, societies, and the global digital landscape. Here’s an overview of each:

Censorship:

1. Definition:

- Censorship involves the restriction or suppression of information, ideas, or media content by authorities or other entities. It can occur at various levels, including government censorship, corporate censorship, and self-censorship.

2. Forms of Censorship:

- Government Censorship: Authorities may restrict access to information to maintain social, political, or cultural control.

- Corporate Censorship: Platforms and content providers may restrict or remove content based on their policies, often related to community standards or legal requirements.

- Self-Censorship: Individuals or organizations may limit their own expression or content to avoid repercussions.

3. Justifications for Censorship:

- National Security: Governments may argue that censorship is necessary to protect national security by preventing the dissemination of sensitive information.

- Public Morality: Censorship may be imposed to maintain societal values and morals.

- Political Stability: Authorities may use censorship to control information that could potentially lead to political unrest.

4. Concerns:

- Freedom of Expression: Censorship can infringe on the fundamental right to freedom of expression, limiting the diversity of ideas and opinions.

- Overreach: Censorship measures may extend beyond their intended purpose, stifling dissent and hindering democratic processes.

5. Internet Censorship:

- Geographic Restrictions: Some countries impose restrictions on internet content based on geographic location, limiting access to certain websites or services.

- Content Filtering: Governments may employ content filtering to block or restrict access to specific websites or types of content.
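Content filtering can be applied at several layers, such as DNS, IP address, URL, or packet inspection. The sketch below shows only the simplest idea, a domain blocklist check, assuming the filter sees full URLs; the blocklist entries and the `is_blocked` name are hypothetical and do not describe any particular country's system. Matching on whole domain labels means that blocking `blocked.example` also blocks `www.blocked.example` but not `notblocked.example`.

```python
from urllib.parse import urlsplit

# Hypothetical blocklist; real filters use lists maintained by the operator.
BLOCKED_DOMAINS = {"blocked.example", "ads.example.net"}

def is_blocked(url: str, blocked=BLOCKED_DOMAINS) -> bool:
    """Return True if the URL's host is a blocked domain or a subdomain of one."""
    # urlsplit() lowercases the hostname; strip a trailing root dot if present.
    host = (urlsplit(url).hostname or "").rstrip(".")
    labels = host.split(".")
    # Check the host and each parent domain: www.a.b -> www.a.b, a.b, b
    return any(".".join(labels[i:]) in blocked for i in range(len(labels)))

print(is_blocked("https://www.blocked.example/news"))  # True
print(is_blocked("https://notblocked.example/"))       # False
```

Because this check relies only on the host name, it is easy to deploy but also easy to circumvent, for instance with a VPN, which is one reason censors often combine several filtering techniques.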

Privacy Issues:

1. Definition:

- Privacy issues revolve around the protection of personal information and the right of individuals to control their own data. With the growth of digital technologies, privacy concerns have become more prominent.

2. Forms of Privacy Invasion:

- Surveillance: Widespread surveillance practices, both by governments and corporations, raise concerns about the monitoring of individuals without their knowledge or consent.

- Data Collection: The extensive collection of personal data by tech companies for targeted advertising and other purposes.

- Data Breaches: Unauthorized access to databases leading to the exposure of sensitive personal information.

3. Justifications for Privacy Invasion:

- National Security: Surveillance programs are sometimes justified as necessary for national security, especially in the context of counterterrorism efforts.

- Business Interests: Companies may argue that data collection is essential for improving services, personalizing user experiences, and targeted advertising.

4. Concerns:

- Individual Autonomy: Invasion of privacy undermines individuals’ ability to control their personal information and make informed choices.

- Misuse of Information: Collected data can be misused for identity theft, fraud, or discriminatory practices.

- Chilling Effects: The fear of surveillance or data misuse may lead to self-censorship and reluctance to express opinions or explore certain topics.

5. Digital Privacy:

- Online Tracking: Websites and online services often track users’ activities, for example through cookies, tracking pixels, and browser fingerprinting, raising concerns about the loss of anonymity.

- Smart Devices: Internet of Things (IoT) devices may capture and transmit personal data, posing privacy risks.

Addressing the Challenges:

1. Legal Protections:

- Implementation and enforcement of robust privacy laws and regulations.

- Safeguards against unwarranted government surveillance and protection of freedom of expression.

2. Technological Solutions:

- Encryption technologies to secure communications and protect data.

- Development of privacy-focused tools and services.
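Encrypting data properly requires a vetted cryptographic library (in Python, for example, the third-party `cryptography` package), so encryption itself is not sketched here. As a standard-library-only illustration of a related privacy-focused technique, the example below pseudonymizes personal identifiers with a keyed hash (HMAC-SHA-256): records can still be linked for analysis, but the raw identifier is not stored. The `pseudonymize` function and the sample identifiers are hypothetical, and unlike encryption, the result cannot be decrypted back into the original value.

```python
import hashlib
import hmac
import secrets

def pseudonymize(identifier: str, key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest, returned as 64 hex characters."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()

# The secret key must be stored separately from the pseudonymized data;
# anyone holding it can recompute pseudonyms for guessed identifiers.
key = secrets.token_bytes(32)

a = pseudonymize("citizen-0001", key)
b = pseudonymize("citizen-0001", key)
c = pseudonymize("citizen-0002", key)
print(a == b)  # True: the same person always maps to the same pseudonym
print(a == c)  # False: different people map to different pseudonyms
```

Using a secret key rather than a plain hash matters: without it, an attacker could hash a list of likely identifiers (such as ID numbers) and match the results against the stored values.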

3. Advocacy and Awareness:

- Public awareness campaigns to educate individuals about privacy rights and risks.

- Advocacy for digital rights and privacy-centric policies.

4. Ethical Use of Technology:

- Responsible data practices by companies, including transparent data collection policies and user consent mechanisms.

- Ethical considerations in the development and deployment of surveillance technologies.