Central Processing Unit

By Notes Vandar

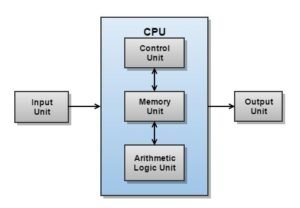

3.1 CPU Structure and Function

The Central Processing Unit (CPU), often referred to as the brain of a computer, is responsible for executing instructions and managing the operations of the entire computer system. The CPU performs all the arithmetic and logical operations, controls data flow, and coordinates the interaction between various hardware components.

Components of the CPU

The CPU is made up of several key components:

- Control Unit (CU):

- The control unit manages and coordinates the operations of the CPU and other hardware components.

- It fetches instructions from memory, decodes them, and executes them by directing the operations of the ALU, registers, and memory.

- It controls the flow of data between the CPU, memory, and input/output devices through control signals.

- Arithmetic Logic Unit (ALU):

- The ALU is responsible for performing arithmetic operations (addition, subtraction, multiplication, division) and logical operations (AND, OR, NOT, XOR).

- It takes inputs from registers, performs the required operation, and sends the results back to the registers.

- Registers:

- Registers are small, fast storage locations within the CPU used to hold data temporarily.

- Common types of registers include:

- Accumulator (ACC): Holds intermediate arithmetic and logic results.

- Program Counter (PC): Holds the address of the next instruction to be executed.

- Instruction Register (IR): Stores the current instruction being executed.

- Memory Address Register (MAR): Holds the memory location of data that needs to be accessed.

- Memory Data Register (MDR): Holds the data that is being transferred to or from memory.

- Cache:

- Cache is a small amount of high-speed memory located within the CPU.

- It stores frequently used data and instructions to reduce the time needed to access data from the main memory (RAM).

- Modern CPUs often have multiple levels of cache: L1 (smallest but fastest), L2, and sometimes L3 (largest but slower).

- Buses:

- The CPU uses several buses to communicate with other parts of the computer, including:

- Data Bus: Transfers data between the CPU, memory, and I/O devices.

- Address Bus: Carries the addresses of memory locations.

- Control Bus: Sends control signals to coordinate operations.


CPU Function

The CPU operates by repeating a sequence of steps known as the Fetch-Decode-Execute Cycle, which ends with a store step when an instruction produces a result:

- Fetch:

- The control unit fetches the next instruction from memory, the location of which is stored in the Program Counter (PC).

- The instruction is loaded into the Instruction Register (IR).

- After fetching, the program counter is updated to point to the next instruction.

- Decode:

- The control unit decodes the fetched instruction to determine what operation is to be performed.

- The instruction is typically broken down into an opcode (which specifies the operation) and operands (which specify the data or memory locations to be used).

- Execute:

- The control unit signals the appropriate part of the CPU to carry out the operation.

- For example, if the instruction is an arithmetic operation, the ALU performs the necessary calculation. If it’s a data transfer, data may be moved between registers, memory, or I/O devices.

- Store:

- The result of the execution is stored in a register or memory, and the cycle begins again.

Diagram of CPU Structure

Functions of the CPU

- Instruction Execution:

- The CPU executes instructions from programs stored in memory. It processes the data based on the program’s instructions and performs calculations or manipulates data.

- Data Movement:

- The CPU is responsible for transferring data between memory and itself or between memory and I/O devices. The control unit manages these data transfers.

- Decision Making (Branching):

- The CPU can make decisions based on the results of its operations. Conditional instructions allow the CPU to branch and execute different sequences of instructions depending on the condition’s outcome.

- Coordination:

- The CPU coordinates the activities of all the computer components. It sends control signals to other components to ensure that the overall operation of the computer is synchronized and efficient.

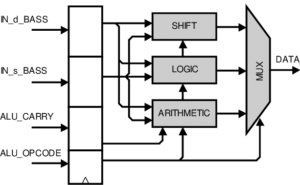

3.2 Arithmetic and Logic Unit

The Arithmetic and Logic Unit (ALU) is one of the most critical components of the Central Processing Unit (CPU). It is responsible for performing all arithmetic and logical operations in a computer system. The ALU is designed to handle arithmetic operations such as addition, subtraction, multiplication, and division, as well as logical operations such as AND, OR, NOT, and XOR.

Components of the ALU

- Arithmetic Unit:

- The arithmetic unit handles all the basic arithmetic operations. It can perform:

- Addition: Adds two numbers.

- Subtraction: Subtracts one number from another.

- Multiplication: Multiplies two numbers.

- Division: Divides one number by another.

- In modern processors, the arithmetic unit may also support more complex operations like floating-point arithmetic.


- Logic Unit:

- The logic unit handles all logical operations, including:

- AND: Logical conjunction; results in true if both operands are true.

- OR: Logical disjunction; results in true if at least one operand is true.

- NOT: Logical negation; inverts the value (true becomes false, and vice versa).

- XOR: Exclusive OR; results in true if exactly one of the operands is true.

- These operations are fundamental to decision-making and branching within a program.


- Flags:

- The ALU sets flags (special bits in a status register) based on the result of its operations. Some common flags include:

- Zero Flag (ZF): Set when the result of an operation is zero.

- Carry Flag (CF): Set when an arithmetic operation results in a carry out or borrow.

- Overflow Flag (OF): Set when the result of a signed arithmetic operation is too large or too small to fit in the destination (for example, adding two positive numbers produces a negative result).

- Sign Flag (SF): Set when the result of an arithmetic operation is negative.

- Parity Flag (PF): Set if the result has an even number of 1s in its binary representation.


Function of the ALU

The ALU is central to the CPU’s ability to process instructions and data. It takes inputs from the CPU’s registers and performs operations on them according to the instruction provided. Once the operation is complete, the result is usually stored back in a register, or a flag is set to indicate the result of the operation.

Operations Performed by the ALU

- Arithmetic Operations:

- Addition: Adds two numbers.

- Subtraction: Subtracts one number from another.

- Multiplication: Multiplies two numbers.

- Division: Divides one number by another.

- Increment/Decrement: Increases or decreases the value of a number by one.

- Logical Operations:

- AND: Returns true if both operands are true.

- OR: Returns true if at least one operand is true.

- NOT: Inverts the value of the operand.

- XOR: Returns true if only one of the operands is true.

- Bitwise Operations:

- Shift Left/Right: Shifts the bits of a number to the left or right, effectively multiplying or dividing by powers of two.

- Rotate Left/Right: Rotates the bits of a number around to the left or right.

- Comparison Operations:

- The ALU can compare two numbers and set flags based on the result (e.g., equal to, less than, greater than). These comparison results can be used for decision-making in branching operations (such as conditional jumps or loops).

ALU in the CPU

In modern CPUs, the ALU is tightly integrated with other components of the processor to ensure efficient operation. It works in conjunction with the Control Unit (CU), which provides the ALU with instructions on what operation to perform and on which data.

ALU Operation in a Simple CPU Cycle:

- Instruction Fetch: The Control Unit fetches the instruction from memory.

- Instruction Decode: The Control Unit decodes the instruction and identifies the operation to be performed by the ALU.

- Execution: The ALU performs the operation on the data (from registers or memory) as specified by the instruction.

- Result Storage: The result of the operation is stored back into a register or memory.

- Flag Setting: Flags are set based on the result of the operation, which may affect the flow of the program (e.g., conditional branching).

ALU Diagram

3.3 Stack

A stack is a linear data structure that follows the Last In, First Out (LIFO) principle. This means that the last element inserted into the stack is the first one to be removed. Think of a stack like a pile of plates: the last plate placed on the top of the pile is the first one taken off.

Stack Representation

A stack can be represented in two ways:

- Array-based stack: An array is used to store stack elements, and a variable (often called top) is used to keep track of the index of the top element.

- Linked-list-based stack: Each element (node) of the stack contains a reference to the next node, forming a linked list where the head node represents the top of the stack.

Stack Operations (Detailed Explanation)

- Push Operation:

- In the push operation, an element is added to the top of the stack.

- Before adding, we must check if there is space (in case of a fixed-size stack) or simply allocate space (in dynamic implementations).

- The top pointer or index is incremented so that it refers to the newly added element.

Example:

Initial Stack: [3, 5, 9] (top points to 9)

After Push(7): [3, 5, 9, 7] (top points to 7)

- Pop Operation:

- In the pop operation, the top element is removed from the stack.

- Before removing, we check if the stack is not empty to avoid underflow (removing from an empty stack).

- After removing the element, the top pointer or index is decremented.

Example:

Initial Stack: [3, 5, 9, 7] (top points to 7)

After Pop(): [3, 5, 9] (top points to 9)

- Peek (Top) Operation:

- The peek operation returns the value of the top element without removing it from the stack.

- Like the pop operation, it checks if the stack is empty before attempting to return the value.

- isEmpty Operation:

- This operation checks whether the stack is empty.

- In array-based implementations, if top == -1, the stack is empty. In linked-list implementations, the stack is empty when the head of the list is null.

- isFull Operation:

- For an array-based stack with fixed size, this operation checks whether the stack is full. If top == max_size - 1, the stack is full.

Stack Applications

- Expression Evaluation:

- Stacks are used to evaluate and convert expressions, such as converting an infix expression (e.g., A + B) to postfix (e.g., AB+) or prefix notation.

- Backtracking:

- Stacks are used in algorithms where you need to backtrack, such as maze solving or depth-first search (DFS) algorithms.

- Function Call Management:

- The call stack in programming languages is used to manage function calls, where each function call is pushed onto the stack, and after execution, it is popped off the stack.

- Undo Mechanism in Software:

- Most software applications that support an undo feature, like text editors, use stacks to keep track of the sequence of changes. Each change is pushed onto the stack, and when undo is invoked, the latest change is popped off.

Stack Representation Using an Array

Here is an example of how a stack can be implemented using an array:

    #include <stdio.h>
    #include <stdlib.h>

    #define MAX 100 // Maximum number of elements the stack can hold

    // Stack structure definition
    struct Stack {
        int items[MAX];
        int top; // Index of the top element; -1 when the stack is empty
    };

    // Function to initialize the stack
    void initialize(struct Stack* s) {
        s->top = -1;
    }

    // Function to check if the stack is full
    int isFull(struct Stack* s) {
        return s->top == MAX - 1;
    }

    // Function to check if the stack is empty
    int isEmpty(struct Stack* s) {
        return s->top == -1;
    }

    // Function to add an element to the stack (push)
    void push(struct Stack* s, int value) {
        if (isFull(s)) {
            printf("Stack Overflow! Cannot push %d onto the stack.\n", value);
            return;
        }
        s->items[++(s->top)] = value; // Advance top, then store the value there
    }

    // Function to remove and return the top element from the stack (pop)
    int pop(struct Stack* s) {
        if (isEmpty(s)) {
            printf("Stack Underflow! Cannot pop from an empty stack.\n");
            exit(1);
        }
        return s->items[(s->top)--]; // Read the top value, then move top down
    }

    // Function to peek at the top element without removing it
    int peek(struct Stack* s) {
        if (isEmpty(s)) {
            printf("Stack is empty.\n");
            exit(1);
        }
        return s->items[s->top];
    }

    // Main function demonstrating stack operations
    int main(void) {
        struct Stack s;
        initialize(&s);

        push(&s, 10);
        push(&s, 20);
        push(&s, 30);

        printf("Top element is %d\n", peek(&s));

        printf("Elements: \n");
        while (!isEmpty(&s)) {
            printf("%d\n", pop(&s));
        }

        return 0;
    }

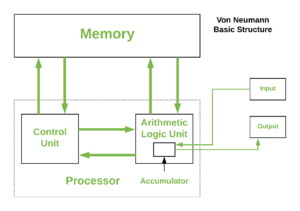

3.4 Processor organization

Processor organization refers to how the components of a Central Processing Unit (CPU) are structured and how they work together to process data and execute instructions. The processor, or CPU, is the brain of the computer and is responsible for executing programs by performing basic arithmetic, logic, control, and input/output operations.

The main components of a CPU include:

- Arithmetic and Logic Unit (ALU):

- The ALU performs all the arithmetic and logical operations, such as addition, subtraction, AND, OR, and comparisons. It receives data from registers and processes it according to instructions from the Control Unit.

- Control Unit (CU):

- The Control Unit is responsible for directing the flow of data and instructions throughout the system. It interprets instructions from programs and signals the ALU, memory, and input/output devices on how to act. It controls the execution of instructions in the correct sequence.

- Registers:

- Registers are small, high-speed storage locations within the CPU that store temporary data and instructions. They hold intermediate data, addresses, or control information while processing an instruction.

- Key registers include:

- Program Counter (PC): Holds the address of the next instruction to be executed.

- Instruction Register (IR): Holds the current instruction being executed.

- Accumulator (ACC): Used to store intermediate results of arithmetic and logic operations.

- General-purpose registers: Used for temporarily storing data or addresses.

- Cache Memory:

- Cache is a small, high-speed memory located close to the processor core. It stores frequently accessed data and instructions to speed up processing by reducing the need to access slower main memory (RAM).

- There are typically multiple levels of cache (L1, L2, L3) with L1 being the smallest and fastest, and L3 being larger but slower.

Processor Organization Types

There are different ways a processor can be organized, depending on how the ALU, CU, registers, and memory are connected and how instructions are processed. These include:

- Single Accumulator-based Organization:

- This is the simplest processor organization. The CPU uses a single register, called the accumulator, to store data temporarily during computations. All operations take place between the accumulator and memory.

- Example: Add two numbers by loading one number into the accumulator and then adding the other directly to it.

- General Register-based Organization:

- In this organization, the processor uses multiple general-purpose registers. Instead of using memory for every operation, data can be held in these registers, allowing faster access and reducing memory references.

- The CPU can perform operations directly between registers (e.g., register-to-register operations), which speeds up instruction execution.

- Stack-based Organization:

- In a stack-based organization, the CPU uses a stack to store intermediate data. The top of the stack is where operations occur, following the Last In, First Out (LIFO) principle.

- Instructions in a stack-based machine often have implicit operands (the top two elements of the stack), and intermediate results are stored back onto the stack.

Processor Organization Diagram

A simplified block diagram of processor organization can look like this:

Processor Organization Functions

- Fetching Instructions:

- The Control Unit fetches the next instruction to be executed from memory, using the Program Counter (PC) to keep track of the address of the next instruction.

- Decoding Instructions:

- The Control Unit decodes the instruction to determine what operation is to be performed. It interprets the opcode (operation code) and the operands (data or addresses) involved in the instruction.

- Executing Instructions:

- The Control Unit sends control signals to the ALU to perform the required operation (such as addition or subtraction). The operands may be fetched from registers or memory, and the result is stored back in a register or memory.

- Storing Results:

- The result of the ALU’s computation is stored either in a general-purpose register or back in main memory, depending on the instruction.

- Control Flow:

- The Control Unit updates the Program Counter to point to the next instruction and repeats the process. Branch instructions or jumps may modify the normal flow by changing the Program Counter.

Examples of Processor Organization

- Von Neumann Architecture:

- In the Von Neumann architecture, data and instructions are stored in the same memory, and a single bus is used to access both. This is the most common processor organization used in general-purpose computers.

- A limitation of this architecture is the Von Neumann bottleneck, where the single bus can slow down the system due to frequent access to memory for both instructions and data.

- Harvard Architecture:

- In the Harvard architecture, there are separate memories and buses for instructions and data. This allows for simultaneous access to both, increasing the speed of the processor.

- This architecture is commonly used in Digital Signal Processors (DSPs) and some microcontrollers.

Pipeline Organization

In modern processors, pipelining is used to improve efficiency by allowing multiple instructions to overlap during execution. A pipeline breaks the instruction cycle into multiple stages, such as fetch, decode, execute, and write-back. While one instruction is being executed, another can be fetched, and another can be decoded, increasing throughput.

- Stages of a Pipeline:

- Instruction Fetch (IF): The processor fetches the instruction from memory.

- Instruction Decode (ID): The instruction is decoded to determine the operation.

- Execution (EX): The operation is performed by the ALU or another functional unit.

- Memory Access (MEM): Data is read from or written to memory if needed.

- Write-back (WB): The result of the operation is written back to the destination register.

3.5 Register organization

Register organization refers to how registers are used and structured within the CPU. Registers are small, high-speed storage units located directly in the processor that store data temporarily for fast access during instruction execution. They are faster than main memory (RAM) and help reduce the time required to execute instructions by minimizing the need to access slower memory locations.

In a CPU, different types of registers are used to perform various functions, such as holding data, addresses, control information, and intermediate results during program execution. The organization of registers varies based on the architecture, but generally, they can be categorized into various types.

Types of Registers

- General-Purpose Registers (GPRs):

- These registers can hold data and addresses and are used to store intermediate results during arithmetic, logic, or data transfer operations.

- Examples: R0, R1, R2, etc., in ARM processors or AX, BX, CX, DX in x86 architecture.

- General-purpose registers are used in most computations and operations, providing flexibility in programming.

- Special-Purpose Registers (SPRs):

- These registers perform specific roles related to control and status functions in the CPU. Examples include:

- Program Counter (PC): Holds the memory address of the next instruction to be executed.

- Instruction Register (IR): Stores the current instruction being executed.

- Stack Pointer (SP): Points to the top of the stack in memory.

- Flags/Status Register: Holds condition codes (flags) set by the ALU, indicating the result of operations (e.g., zero flag, carry flag, sign flag).

- Link Register (LR): Stores return addresses in subroutine calls (used in ARM and other architectures).


- Accumulator (ACC):

- The accumulator is used to store intermediate results of arithmetic and logic operations. It plays a central role in single-accumulator architectures where most operations occur in this register.

- Memory Address Register (MAR):

- The MAR holds the address of the memory location that is currently being accessed (either for reading data or writing data).

- Memory Data Register (MDR) or Memory Buffer Register (MBR):

- The MDR temporarily holds data that is being transferred to or from the memory. When reading from memory, the data read is stored in the MDR before being transferred to other parts of the CPU.

- Index Registers:

- These registers hold memory addresses used for indexed addressing modes, allowing efficient data access in loops or arrays.

- Example: The SI (Source Index) and DI (Destination Index) registers in x86 processors.

- Stack Pointer (SP):

- The stack pointer holds the address of the top of the stack. It is used to manage the call stack during function calls and return operations, as well as to keep track of local variables, function parameters, and return addresses.

- Base Pointer (BP) or Frame Pointer (FP):

- This register points to the base of the current stack frame in memory. It is used to access function parameters and local variables in high-level languages.

Register File

In modern CPUs, a register file is a collection of registers organized as a small memory bank within the processor. The register file enables the CPU to access data quickly without needing to access main memory.

- The register file consists of multiple registers that can be accessed by the processor for different operations.

- The number of registers in the register file is usually fixed by the architecture (e.g., 32 or 64 registers in many architectures).

- In RISC (Reduced Instruction Set Computer) architectures, the register file is typically large (32 or more registers), while CISC (Complex Instruction Set Computer) architectures may have fewer general-purpose registers.

Flag Registers (Status Register)

The status register, also called the flag register, holds the results of comparisons or arithmetic operations. The flags indicate the status of the processor after a specific operation and are used for decision-making in control flow (like branching or looping). Key flags include:

- Zero flag (Z): Set when the result of an operation is zero.

- Carry flag (C): Set when an arithmetic operation generates a carry out or borrow.

- Sign flag (S): Set when the result of an operation is negative.

- Overflow flag (O): Set when an arithmetic overflow occurs (i.e., the result exceeds the capacity of the register).

- Parity flag (P): Set when the number of set bits in the result is even.

Role of Registers in CPU Operations

Registers play a crucial role in the CPU’s fetch-decode-execute cycle:

- Fetch:

- The Program Counter (PC) provides the address of the next instruction, which is fetched from memory and placed in the Instruction Register (IR).

- Decode:

- The Control Unit decodes the instruction in the IR, determining which registers will be used for the operation (e.g., fetching operands from GPRs or setting flags in the Status Register).

- Execute:

- The Arithmetic and Logic Unit (ALU) executes the operation. Operands may be fetched from GPRs, and results are typically stored back into registers like the Accumulator or general-purpose registers.

- Write-back:

- The result of the operation may be written back to memory or kept in a register for further operations.

Register Organization and CPU Architecture

The register organization of a CPU depends on the architecture, with two major types being RISC and CISC:

- RISC (Reduced Instruction Set Computer):

- RISC processors have a large number of general-purpose registers, enabling most operations to take place between registers rather than between memory and registers. This reduces memory access, speeding up the execution.

- Examples: ARM, MIPS processors.

- CISC (Complex Instruction Set Computer):

- CISC processors often have fewer registers and more complex instructions that operate directly on memory, with a wide variety of addressing modes.

- Examples: x86 processors (Intel, AMD).

Example: Register Organization in x86 Architecture

In x86 processors (Intel and AMD), the register organization includes:

- General-purpose registers:

- EAX, EBX, ECX, EDX: These are extended 32-bit registers (for 16-bit mode, the lower halves are AX, BX, CX, DX).

- ESI (Source Index), EDI (Destination Index): Used for string operations and memory access.

- EBP (Base Pointer), ESP (Stack Pointer): Used for stack operations.

- Segment registers:

- CS (Code Segment), DS (Data Segment), SS (Stack Segment), etc., used to point to different segments of memory in real mode or protected mode.

- Special-purpose registers:

- EFLAGS: The flags register that contains status bits like carry, zero, sign, and overflow.

- EIP (Instruction Pointer): Holds the address of the next instruction to be executed.

Example: Register Organization in ARM Architecture

ARM processors have a simpler, more orthogonal register organization, typical of RISC architecture:

- General-purpose registers:

- R0 to R15 (where R13 is typically the Stack Pointer, R14 is the Link Register for storing return addresses, and R15 is the Program Counter).

- CPSR (Current Program Status Register): Holds status flags and control information about the current operating mode and processor state.

3.6 Instruction formats

An instruction format in computer architecture refers to the layout or structure of a machine instruction, detailing how different components (fields) of the instruction are arranged in memory or registers. These fields typically represent the operation code (opcode), operand addresses, and other control information needed for the instruction to execute.

Instruction formats vary across different CPU architectures (RISC, CISC, etc.), but they usually include several common elements. The instruction format determines the instruction size, the number of operands, the type of addressing modes used, and the complexity of the instruction set.

Key Components of an Instruction Format

- Opcode:

- The opcode (operation code) specifies the operation to be performed by the CPU, such as addition, subtraction, load, store, or branching.

- It is usually the first field in the instruction and is critical in determining what action the processor will take.

- Operand(s):

- Operands represent the data or the memory locations (addresses) on which the operation specified by the opcode will act.

- An instruction may have zero, one, two, or more operands, depending on the architecture.

- Operands can be in registers, in memory, or be constants (immediate values).

- Addressing Mode:

- The addressing mode defines how the operands are accessed. Different modes include immediate, direct, indirect, register, and index addressing.

- Some architectures include an addressing mode field in the instruction, while others implicitly assume a specific mode.

- Instruction Length:

- The length of an instruction varies between architectures:

- Fixed-length instructions: All instructions have the same size (common in RISC architectures). This simplifies decoding and improves performance.

- Variable-length instructions: Instruction lengths can vary depending on the operation or the number of operands (common in CISC architectures like x86).


Types of Instruction Formats

- Zero-Address Format (Stack-based):

- In a zero-address instruction, the instruction does not explicitly specify operands because it operates on data already placed on the stack.

- Example: PUSH, POP, ADD (with implicit operands from the stack).

[Opcode]

- One-Address Format:

- In a one-address instruction, only one operand is explicitly provided, and the other operand is often implied (e.g., in the accumulator register).

- Example: In a typical accumulator-based architecture:

LOAD A (load the value from address A into the accumulator).

ADD B (add the value from address B to the accumulator).

[Opcode] [Operand1]

- Two-Address Format:

- A two-address instruction specifies two operands. One operand acts as both the source and destination, while the other is the second source operand.

- Example: ADD R1, R2 (add the value of R2 to R1 and store the result in R1).

[Opcode] [Operand1] [Operand2]

- Three-Address Format:

- In a three-address instruction, all three operands are explicitly specified: two source operands and one destination operand.

- Example: ADD R1, R2, R3 (add the values of R2 and R3 and store the result in R1).

[Opcode] [Operand1] [Operand2] [Operand3]

- Register-Memory Format:

- In some architectures (like x86), instructions can have a register and a memory address as operands.

- Example: MOV AX, [BX] (move data from the memory location addressed by BX into register AX).

[Opcode] [Register] [Memory Address]

Common Instruction Formats in Different Architectures

- RISC (Reduced Instruction Set Computer):

- RISC architectures like ARM and MIPS tend to use fixed-length instruction formats (e.g., 32 bits for each instruction). This simplifies decoding and enables faster instruction execution.

- RISC instruction formats often use three-address instructions for operations like addition and multiplication.

Example RISC instruction format:

[Opcode (6 bits)] [Register1 (5 bits)] [Register2 (5 bits)] [Destination Register (5 bits)] [Shift Amount (5 bits)] [Function (6 bits)]

- CISC (Complex Instruction Set Computer):

- CISC architectures like x86 use variable-length instruction formats, where some instructions are just 1 byte long while others may be much longer.

- CISC instructions often include multiple addressing modes and can operate on both memory and registers in the same instruction.

Example x86 instruction format (variable length):

[Opcode] [Mod R/M] [SIB] [Displacement] [Immediate]

- Mod R/M: Specifies how operands are accessed (register or memory).

- SIB (Scale-Index-Base): Used for addressing modes involving index registers.

- Displacement: Memory offset.

- Immediate: Constant value used as an operand.

Examples of Instruction Formats

- MIPS Instruction Format (RISC Architecture)

MIPS uses three types of instruction formats:

- R-format (used for register operations):

[Opcode (6 bits)] [Rs (5 bits)] [Rt (5 bits)] [Rd (5 bits)] [Shamt (5 bits)] [Function (6 bits)]

- Example: ADD Rd, Rs, Rt (add values in registers Rs and Rt and store in Rd).

- I-format (used for immediate values or load/store instructions):

[Opcode (6 bits)] [Rs (5 bits)] [Rt (5 bits)] [Immediate (16 bits)]

- Example:

LW Rt, offset(Rs)(load word from memory intoRtusing base address inRsplus an immediate offset).

- J-format (used for jump instructions):

[Opcode (6 bits)] [Target Address (26 bits)]

- Example:

J target (jump to the specified target address).

- x86 Instruction Format (CISC Architecture)

x86 instructions have variable-length formats. A simple instruction to move data from memory to a register can look like this:

[Opcode] [Mod R/M] [SIB] [Displacement] [Immediate]

- Example:

MOV AX, [1234h] (move data from memory at address 1234h to register AX).
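The fixed-width MIPS formats above can be made concrete by packing the fields into a 32-bit word. The following is a minimal Python sketch, not a full assembler: the helper names (`encode_r`, `encode_i`, `encode_j`) are hypothetical, the register numbers follow the usual MIPS convention ($t0 = 8, $t1 = 9, $t2 = 10), and the opcode and function values used are the standard ones for ADD, LW, and J.

```python
def encode_r(opcode, rs, rt, rd, shamt, funct):
    """Pack the six R-format fields (6+5+5+5+5+6 bits) into one 32-bit word."""
    return (opcode << 26) | (rs << 21) | (rt << 16) | (rd << 11) | (shamt << 6) | funct


def encode_i(opcode, rs, rt, immediate):
    """Pack the I-format fields (6+5+5+16 bits); the immediate is cut to 16 bits."""
    return (opcode << 26) | (rs << 21) | (rt << 16) | (immediate & 0xFFFF)


def encode_j(opcode, byte_address):
    """Pack the J-format fields (6+26 bits); the target is stored as a word address."""
    return (opcode << 26) | ((byte_address >> 2) & 0x03FFFFFF)


# Assumed register numbers (conventional MIPS numbering).
T0, T1, T2 = 8, 9, 10

add_word = encode_r(0x00, T1, T2, T0, 0, 0x20)  # ADD $t0, $t1, $t2
lw_word = encode_i(0x23, T1, T0, 4)             # LW  $t0, 4($t1)
j_word = encode_j(0x02, 0x00400000)             # J   0x00400000

print(f"{add_word:08X}")  # 012A4020
print(f"{lw_word:08X}")   # 8D280004
print(f"{j_word:08X}")    # 08100000
```

Because every field sits at a fixed bit position, a decoder can extract the opcode and register numbers with simple shifts and masks, which is the decoding advantage attributed to fixed-length formats.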

3.7 Addressing modes

Addressing modes in computer architecture refer to the different ways in which the operands of an instruction are specified. These modes allow flexibility in how data is accessed, stored, and manipulated during the execution of instructions.

Addressing modes are essential because they influence how an instruction refers to the location of its operands—whether they are in memory, in registers, or provided as immediate values. The use of addressing modes enables more efficient use of the instruction set and can affect the performance of a program.

Common Addressing Modes

- Immediate Addressing Mode:

- In this mode, the operand is specified directly in the instruction as a constant or literal value.

- The operand is part of the instruction itself.

- Example:

ADD R1, #5 ; Add the immediate value 5 to the content of register R1

Here, #5 is the immediate value.

- Register Addressing Mode:

- The operand is located in a CPU register, and the instruction specifies the register holding the operand.

- This is the fastest addressing mode since accessing registers is quicker than accessing memory.

- Example:

MOV R1, R2 ; Move the value in register R2 to register R1

- Direct (Absolute) Addressing Mode:

- The address of the operand is provided directly in the instruction.

- The instruction contains the memory address where the operand is stored.

- Example:

LOAD R1, 1000 ; Load the content of memory location 1000 into register R1

- Indirect Addressing Mode:

- The address of the operand is stored in a register or memory location, and the instruction specifies the register or memory location that holds this address.

- The content of the specified location or register is used as the effective address of the operand.

- Example:

MOV R1, [R2] ; Move the value from the memory location pointed to by R2 into R1

- Indexed Addressing Mode:

- The effective address of the operand is generated by adding a constant value (index) to the content of a register.

- This is useful for accessing arrays or tables where the base address is stored in a register and the index specifies the offset.

- Example:

LOAD R1, [R2 + 5] ; Load the value from the memory address R2 + 5 into R1

- Base Register Addressing Mode:

- Similar to indexed addressing, but the base register holds the starting address of the memory block, and the offset is typically provided by another register.

- Example:

MOV R1, [BaseReg + OffsetReg] ; Move the value from memory address (BaseReg + OffsetReg) to R1

- Relative Addressing Mode:

- The effective address of the operand is determined by adding a constant value (offset) to the program counter (PC). This is commonly used for branch or jump instructions.

- Example:

JMP Label ; Jump to the instruction at address PC + offset

- Auto-Increment/Auto-Decrement Addressing Mode:

- In auto-increment, the address in the register is used to access memory, and then the address is automatically incremented for the next operation.

- In auto-decrement, the address is decremented before accessing the memory location.

- Example (Auto-Increment):

LOAD R1, [R2]+ ; Load from the memory location pointed to by R2 and then increment R2
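The modes listed above differ only in how the operand (or its effective address) is found. The Python sketch below models that lookup for each mode. The register names, memory contents, and function names are hypothetical and chosen only for illustration; a real CPU does this in hardware.

```python
# Hypothetical machine state: a few registers, a program counter, sparse memory.
regs = {"R1": 0, "R2": 1000, "BaseReg": 2000, "OffsetReg": 8}
pc = 500
memory = {1000: 42, 1005: 7, 2008: 99}


def operand_immediate(value):
    return value                              # operand is inside the instruction


def operand_register(name):
    return regs[name]                         # operand is in a register


def operand_direct(address):
    return memory[address]                    # instruction holds the operand's address


def operand_indirect(name):
    return memory[regs[name]]                 # register holds the operand's address


def operand_indexed(name, constant):
    return memory[regs[name] + constant]      # address = register + constant


def operand_base(base, offset):
    return memory[regs[base] + regs[offset]]  # address = base register + offset register


def target_relative(offset):
    return pc + offset                        # branch target = PC + offset


def operand_auto_increment(name, step=1):
    value = memory[regs[name]]                # use the address first...
    regs[name] += step                        # ...then advance the register
    return value


# Auto-increment is evaluated last because it modifies R2.
results = {
    "immediate": operand_immediate(5),
    "register": operand_register("R2"),
    "direct": operand_direct(1000),
    "indirect": operand_indirect("R2"),
    "indexed": operand_indexed("R2", 5),
    "base": operand_base("BaseReg", "OffsetReg"),
    "relative": target_relative(20),
    "auto_increment": operand_auto_increment("R2"),
}
for mode, value in results.items():
    print(f"{mode:15} -> {value}")
```

Only the immediate and register modes avoid a memory access in this sketch, which matches the earlier remark that register addressing is the fastest mode.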

Summary Table of Addressing Modes

| Addressing Mode | Operand Specification | Example |
|---|---|---|
| Immediate | Operand is directly specified in the instruction | MOV R1, #5 |
| Register | Operand is in a register | MOV R1, R2 |
| Direct (Absolute) | Address of operand is in the instruction | LOAD R1, 1000 |
| Indirect | Address of operand is in a register/memory | MOV R1, [R2] |
| Indexed | Operand’s address is register + offset | LOAD R1, [R2 + 5] |
| Base Register | Operand’s address is base register + offset | MOV R1, [BaseReg + OffsetReg] |
| Relative | Operand’s address is PC + offset | JMP Label |
| Auto-Increment/Decrement | Operand’s address is adjusted before/after use | LOAD R1, [R2]+ |

3.8 Data transfer and manipulation

In computer systems, data transfer and data manipulation are two fundamental operations that involve moving data between memory, registers, and devices, as well as performing operations on the data to produce desired results. These operations are essential for executing instructions and managing resources in a CPU.

1. Data Transfer

Data transfer refers to the process of moving data between various parts of the computer, such as registers, memory, and input/output devices. Data transfer instructions handle the movement of data between these components without altering the content of the data itself.

Common Data Transfer Instructions

- Move (MOV):

- Moves data from one location (source) to another (destination), such as from one register to another or from memory to a register.

- Example:

MOV R1, R2 ; Move the content of register R2 into register R1

MOV AX, [1000] ; Move the data at memory address 1000 into register AX

- Load (LD):

- Loads data from memory into a register.

- Example:

LOAD R1, [1000] ; Load the data from memory address 1000 into register R1

- Store (ST):

- Stores data from a register into memory.

- Example:

STORE R1, [2000] ; Store the data from register R1 into memory address 2000

- Exchange (XCHG):

- Swaps the contents of two registers or between a register and a memory location.

- Example:

XCHG R1, R2 ; Exchange the contents of register R1 and register R2

- Push:

- Places data on the stack.

- Example:

PUSH R1 ; Push the content of register R1 onto the stack

- Pop:

- Retrieves data from the stack.

- Example:

POP R1 ; Pop the top of the stack into register R1

- Input/Output (IN/OUT):

- Transfers data between the CPU and input/output devices.

- Example:

IN AX, PORT1 ; Input data from PORT1 into register AX

OUT PORT2, AX ; Output data from register AX to PORT2
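The register, memory, and stack transfers above can be traced with a small simulation. This is a minimal Python sketch under stated assumptions: `TinyMachine` and its method names are hypothetical, memory is modelled as a dictionary, and the I/O instructions are left out because they depend on real devices.

```python
class TinyMachine:
    """Hypothetical machine: named registers, addressable memory, and a stack."""

    def __init__(self):
        self.regs = {"R1": 0, "R2": 0}
        self.memory = {}
        self.stack = []

    def mov(self, dst, src):
        self.regs[dst] = self.regs[src]                # register -> register

    def load(self, dst, address):
        self.regs[dst] = self.memory.get(address, 0)   # memory -> register

    def store(self, src, address):
        self.memory[address] = self.regs[src]          # register -> memory

    def xchg(self, a, b):
        self.regs[a], self.regs[b] = self.regs[b], self.regs[a]

    def push(self, src):
        self.stack.append(self.regs[src])              # copy register onto the stack

    def pop(self, dst):
        self.regs[dst] = self.stack.pop()              # top of stack -> register


m = TinyMachine()
m.memory[1000] = 25
m.load("R1", 1000)    # R1 = 25
m.mov("R2", "R1")     # R2 = 25
m.store("R2", 2000)   # memory[2000] = 25
m.regs["R1"] = 7      # set R1 directly so the exchange is visible
m.xchg("R1", "R2")    # R1 = 25, R2 = 7
m.push("R1")          # stack = [25]
m.pop("R2")           # R2 = 25, stack = []
print(m.regs, m.memory, m.stack)
```

None of these operations changes the value being moved; they only change where it is held, which is what separates data transfer from data manipulation.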

2. Data Manipulation

Data manipulation refers to operations that modify the content of data, either through arithmetic, logical, or bit manipulation instructions. These instructions perform computations and data adjustments within the CPU.

Common Data Manipulation Instructions

- Arithmetic Operations:

- These involve basic mathematical operations like addition, subtraction, multiplication, and division.

- Add (ADD): Adds two operands and stores the result in the destination.

ADD R1, R2 ; Add the content of R2 to R1 and store the result in R1

- Subtract (SUB): Subtracts the second operand from the first operand.

SUB R1, R2 ; Subtract the content of R2 from R1 and store the result in R1

- Multiply (MUL): Multiplies two operands.

MUL R1, R2 ; Multiply the contents of R1 and R2, result stored in R1

- Divide (DIV): Divides one operand by another.

DIV R1, R2 ; Divide R1 by R2, quotient stored in R1

- Logical Operations:

- These operations manipulate the bits of data and include AND, OR, XOR, and NOT operations.

- AND: Performs a bitwise AND operation on two operands.

AND R1, R2 ; Perform bitwise AND of R1 and R2, store result in R1

- OR: Performs a bitwise OR operation on two operands.

OR R1, R2 ; Perform bitwise OR of R1 and R2, store result in R1

- XOR: Performs a bitwise XOR operation.

XOR R1, R2 ; Perform bitwise XOR of R1 and R2, store result in R1

- NOT: Performs a bitwise negation on the operand.

NOT R1 ; Invert all bits of register R1

- Shift and Rotate Operations:

- Shift instructions move bits in a register to the left or right.

- Rotate instructions circulate the bits around the register.

- Shift Left (SHL): Shifts bits to the left and fills with zeros.

SHL R1, 1 ; Shift all bits in R1 to the left by 1 bit

- Shift Right (SHR): Shifts bits to the right and fills with zeros.

SHR R1, 1 ; Shift all bits in R1 to the right by 1 bit

- Rotate Left (ROL): Rotates bits to the left, with the leftmost bit becoming the rightmost.

ROL R1, 1 ; Rotate all bits in R1 to the left by 1 bit

- Rotate Right (ROR): Rotates bits to the right.

ROR R1, 1 ; Rotate all bits in R1 to the right by 1 bit

- Comparison:

- Compares two operands and sets the condition flags based on the result.

- Example:

CMP R1, R2 ; Compare the contents of R1 and R2, update flags

- Increment and Decrement:

- Increment (INC): Adds 1 to the value of an operand.

INC R1 ; Increment the value in register R1 by 1

- Decrement (DEC): Subtracts 1 from the value of an operand.

DEC R1 ; Decrement the value in register R1 by 1
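The difference between shifting and rotating, and the way a comparison sets flags without changing its operands, is easier to see on concrete bit patterns. The Python sketch below assumes an 8-bit register width and a carry flag that signals an unsigned borrow (the convention used by x86). The function names are hypothetical.

```python
WIDTH = 8                  # assumed register width for this sketch
MASK = (1 << WIDTH) - 1    # 0xFF keeps results within 8 bits


def shl(value, n=1):
    return (value << n) & MASK                        # zeros enter on the right


def shr(value, n=1):
    return (value & MASK) >> n                        # zeros enter on the left


def rol(value, n=1):
    n %= WIDTH
    return ((value << n) | (value >> (WIDTH - n))) & MASK   # left bits wrap to the right


def ror(value, n=1):
    n %= WIDTH
    return ((value >> n) | (value << (WIDTH - n))) & MASK   # right bits wrap to the left


def cmp_flags(a, b):
    """Compute flags for a - b; the operands themselves are left unchanged."""
    diff = (a - b) & MASK
    return {"zero": diff == 0, "carry": a < b}


r1 = 0b10010111
print(f"{shl(r1):08b}")   # 00101110
print(f"{shr(r1):08b}")   # 01001011
print(f"{rol(r1):08b}")   # 00101111
print(f"{ror(r1):08b}")   # 11001011
print(cmp_flags(5, 5))    # {'zero': True, 'carry': False}
print(cmp_flags(3, 5))    # {'zero': False, 'carry': True}
```

A shift discards the bit that falls off the end, while a rotate feeds it back in at the other end, so rotating by the full register width returns the original value.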

3.9 RISC and CISC

RISC (Reduced Instruction Set Computer) and CISC (Complex Instruction Set Computer) are two major types of CPU architecture design. These architectures differ in how instructions are designed and how the CPU handles them during execution.

RISC (Reduced Instruction Set Computer)

RISC is a CPU design philosophy that simplifies the instruction set so that most instructions can complete in a single clock cycle, which speeds up processing. The RISC architecture aims for efficiency and performance by reducing the complexity of instructions and optimizing the execution of simple operations.

Key Features of RISC:

- Simple Instructions:

- The instruction set contains fewer and simpler instructions. Most instructions are designed to execute in a single clock cycle, which keeps execution fast.

- Example: Rather than relying on complex multi-step instructions, a RISC design favors simple operations like “ADD” and “SHIFT,” which can be combined to do more complex work.

- Fixed-Length Instructions:

- All instructions are of the same size, which simplifies instruction fetching and decoding.

- Typically, RISC instructions are 32 bits long.

- Load/Store Architecture:

- In RISC, memory access is restricted to specific instructions (like LOAD and STORE), while most operations are performed on registers.

- The CPU can perform operations only on data in registers, and separate instructions are used to move data between memory and registers.

- Fewer Addressing Modes:

- RISC uses a limited set of addressing modes, often only a few simple ones like register and immediate addressing.

- Pipelining:

- RISC designs are optimized for pipelining, where multiple instructions can be executed in different stages of completion simultaneously.

- Compiler Responsibility:

- The RISC philosophy places more responsibility on the compiler to generate efficient machine code, as instructions are simpler and may need to be combined for complex tasks.

Advantages of RISC:

- High Performance: With simpler instructions, RISC processors can execute more instructions per second.

- Efficient Pipelining: RISC’s simple instructions allow for better utilization of pipelining, reducing the number of cycles per instruction.

- Lower Power Consumption: Because of the simplicity of the instructions, RISC processors generally consume less power.
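The pipelining advantage listed above can be illustrated with an idealized cycle count. The Python sketch below assumes every stage takes one clock cycle and that the pipeline never stalls, which real processors do not achieve. The function names and the choice of 100 instructions on a 5-stage pipeline are hypothetical.

```python
def cycles_unpipelined(instructions, stages):
    """Each instruction passes through every stage before the next one starts."""
    return instructions * stages


def cycles_pipelined(instructions, stages):
    """The first instruction fills the pipeline; each later one finishes a cycle apart."""
    return stages + (instructions - 1)


n, k = 100, 5                              # assumed workload and pipeline depth
sequential = cycles_unpipelined(n, k)
overlapped = cycles_pipelined(n, k)
speedup = sequential / overlapped

print(sequential)           # 500
print(overlapped)           # 104
print(round(speedup, 2))    # 4.81
```

As the instruction count grows, the ideal speedup approaches the number of stages, so the average cost moves toward one cycle per instruction.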

Disadvantages of RISC:

- Code Size: Since complex operations must be broken down into several simple instructions, RISC programs can require more instructions, leading to larger code size.

- Dependency on Compiler: The compiler needs to be highly efficient to make optimal use of the RISC instruction set.

Examples of RISC Architectures:

- ARM (used in mobile devices)

- MIPS

- SPARC

- RISC-V

CISC (Complex Instruction Set Computer)

CISC is a CPU design philosophy that emphasizes a rich set of complex instructions, which may take multiple clock cycles to execute. CISC processors have a large number of instructions, each of which may perform a multi-step operation like loading data from memory, performing an arithmetic operation, and storing the result back into memory, all in one instruction.

Key Features of CISC:

- Complex Instructions:

- The instruction set contains a large number of complex instructions that can perform multiple tasks in one instruction.

- Example: A single instruction like MULT can load two numbers from memory, multiply them, and store the result back in memory.

- Variable-Length Instructions:

- CISC instructions can be of varying lengths, making the instruction fetch and decode processes more complex.

- Fewer Registers:

- Since many operations are performed directly on memory, CISC architectures often have fewer registers compared to RISC.

- Multiple Addressing Modes:

- CISC supports a wide range of addressing modes, providing flexibility in how operands are accessed (direct, indirect, immediate, etc.).

- Microcode Control:

- CISC processors often use microcode to implement complex instructions, translating them into a series of simpler operations that the hardware can execute.

- Memory-to-Memory Operations:

- Unlike RISC, CISC allows instructions to directly manipulate data in memory, without requiring explicit loading and storing in registers.

Advantages of CISC:

- Reduced Program Size: CISC programs tend to have fewer instructions because each instruction can perform more work. This reduces the memory needed for program storage.

- Ease of Programming: Complex instructions make programming easier for assembly language programmers, as fewer instructions are needed for complex tasks.

- Backward Compatibility: CISC processors, like Intel’s x86, maintain compatibility with earlier generations of processors, making them more versatile in supporting legacy software.

Disadvantages of CISC:

- Slower Execution: Complex instructions can take multiple clock cycles to execute, slowing down the processing speed.

- Higher Power Consumption: The added complexity of decoding and executing such instructions tends to increase power consumption.

- Pipelining Challenges: The complexity and variable length of instructions make pipelining difficult, reducing the efficiency of executing multiple instructions simultaneously.

Examples of CISC Architectures:

- Intel x86

- IBM System/360

- VAX (Digital Equipment Corporation)
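To contrast the two philosophies described above, the sketch below performs the same multiplication twice: once as a single memory-to-memory instruction in the CISC style, and once as a load/store sequence in the RISC style. It is a Python model under stated assumptions, not real machine code: the addresses, register names, and the `cisc_mult` and `run_risc` helpers are hypothetical.

```python
memory = {0x10: 6, 0x14: 7, 0x18: 0}   # two inputs and one result slot
regs = {"R1": 0, "R2": 0}


def cisc_mult(dst, src_a, src_b):
    """One CISC-style instruction: read both operands from memory, multiply, write back."""
    memory[dst] = memory[src_a] * memory[src_b]


# The same work as a RISC-style sequence: only LOAD and STORE touch memory.
risc_program = [
    ("LOAD", "R1", 0x10),
    ("LOAD", "R2", 0x14),
    ("MUL", "R1", "R2"),
    ("STORE", "R1", 0x18),
]


def run_risc(program):
    for op, a, b in program:
        if op == "LOAD":
            regs[a] = memory[b]             # memory -> register
        elif op == "MUL":
            regs[a] = regs[a] * regs[b]     # register-to-register arithmetic
        elif op == "STORE":
            memory[b] = regs[a]             # register -> memory


cisc_mult(0x18, 0x10, 0x14)
cisc_result = memory[0x18]

memory[0x18] = 0                            # clear the result slot before the second run
run_risc(risc_program)
risc_result = memory[0x18]

print(cisc_result, risc_result, len(risc_program))  # 42 42 4
```

Both versions produce the same result. The CISC form needs one instruction where the RISC form needs four, which reflects the code-size trade-off, while each RISC step is simple enough to fit a uniform pipeline stage.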

RISC vs CISC: A Comparison

| Feature | RISC | CISC |
|---|---|---|
| Instruction Set | Simple, with fewer instructions | Complex, with many instructions |
| Instruction Execution | Typically one clock cycle per instruction | Multiple clock cycles per instruction |
| Instruction Length | Fixed length (e.g., 32 bits) | Variable length |
| Memory Operations | Load/store architecture | Memory-to-memory operations allowed |
| Addressing Modes | Few (limited to registers, immediate) | Many addressing modes |
| Registers | More registers | Fewer registers |
| Code Size | Larger code size (more instructions) | Smaller code size (fewer instructions) |
| Pipelining | Easier to implement and more efficient | Harder to implement |
| Compiler Role | Important for optimizing performance | Less reliant on the compiler |
| Power Consumption | Lower power consumption | Higher power consumption |

3.10 64-Bit Processor

A 64-bit processor is a central processing unit (CPU) capable of handling data in 64-bit chunks, which allows it to process more information at once compared to 32-bit processors. This capability enhances performance, especially in tasks that require large data computations.

Key Features of 64-Bit Processors

- Increased Data Handling: By processing 64 bits of data simultaneously, these processors can manage larger numbers and more complex computations more efficiently than 32-bit processors.

- Expanded Memory Addressing: A 64-bit architecture can address significantly more memory than a 32-bit system, enabling support for larger amounts of RAM. This expansion is crucial for applications that demand high memory usage, such as video editing and 3D rendering.

- Enhanced Performance: The ability to handle more data per cycle leads to improved performance in various applications, including scientific computing, database management, and advanced gaming.
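The difference in data range and memory addressing can be worked out directly from the bit widths. The Python sketch below assumes byte-addressable memory and that all 32 or 64 address bits are usable. Real 64-bit processors typically implement fewer physical and virtual address bits, so the 64-bit figure is an architectural upper bound, not a practical limit. The function name is hypothetical.

```python
def addressable_bytes(address_bits):
    """Number of distinct byte addresses an address of this width can express."""
    return 2 ** address_bits


GIB = 2 ** 30   # bytes in one gibibyte

print(addressable_bytes(32) // GIB)   # 4 (GiB)
print(addressable_bytes(64) // GIB)   # 17179869184 (GiB, i.e. 16 EiB)

# Largest unsigned integer that fits in a single register of each width.
print(2 ** 32 - 1)   # 4294967295
print(2 ** 64 - 1)   # 18446744073709551615
```

The 4 GiB ceiling is why 32-bit systems cannot directly address more RAM than that without extensions, while the 64-bit bound is far beyond the memory of current machines.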

Transition from 32-Bit to 64-Bit

The shift from 32-bit to 64-bit processors began in the early 2000s, driven by the need for greater computational power and memory capacity. Initially, 64-bit processors were common in servers and high-performance computing environments. Over time, they became standard in desktops, laptops, and mobile devices.

Compatibility Considerations

While 64-bit processors offer numerous advantages, they require compatible operating systems and software to fully utilize their capabilities. Most modern operating systems, such as Windows, macOS, and Linux, support 64-bit architecture. However, running 32-bit applications on a 64-bit system may require compatibility layers or specific configurations.

Examples of 64-Bit Processors

- Intel Core Series: Intel’s Core i3, i5, i7, and i9 processors have supported 64-bit computing since the introduction of the x86-64 architecture.

- AMD Ryzen Series: AMD’s Ryzen processors are built on a 64-bit architecture, offering competitive performance in both desktop and server markets.

- Apple Silicon: Apple’s M-series chips, including the M1, M2, and subsequent models, are 64-bit ARM-based processors designed for high performance and energy efficiency.

Checking Your Processor’s Architecture

To determine if your system has a 64-bit processor:

- Windows: Open the System Information window and look for “System Type.” It will indicate “x64-based PC” for 64-bit systems.

- macOS: Click on the Apple logo, select “About This Mac,” and review the processor information.

- Linux: Open a terminal and run the command lscpu. The output will display the architecture type.
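A cross-platform alternative to the steps above is to ask Python's standard library. This minimal sketch uses `platform.machine()` and `struct.calcsize("P")`; the variable names are only illustrative. Note that the pointer size describes the Python build that is running, so a 32-bit interpreter on a 64-bit CPU would still report 32.

```python
import platform
import struct

# Machine type reported by the operating system, e.g. "x86_64", "AMD64", or "arm64".
machine = platform.machine()

# Size of a native pointer in this Python build, converted from bytes to bits.
pointer_bits = struct.calcsize("P") * 8

print(f"Machine type: {machine}")
print(f"Pointer width of this Python build: {pointer_bits}-bit")
```

If the machine type names a 64-bit architecture but the pointer width is 32, the processor is 64-bit and only the interpreter is a 32-bit build.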

Understanding whether your processor is 64-bit is essential for optimizing software performance and ensuring compatibility with modern applications and operating systems.